Welcome to the great, wonderful world of Google Analytics referral spam and bot traffic: the most recent chapter in a long tradition of sending irrelevant or inappropriate messages on the Internet to a large number of people. This guide outlines why it matters, what’s going on, and most importantly, exactly what to do about it (skip to that section here).

EDIT 12-15-2016: Google has not developed a global solution. In fact, GA spam is worse than ever. And with the latest wave of spam in November, even the most reliable methods are no longer fully effective. Your best bet is to keep “layering” all the defenses that I mention below. Additionally, keep learning what you can do with Analytics, and look carefully for spoofed traffic.

EDIT 7-24-2015: Google has announced that they are looking into a global solution. Additionally, Analytics Edge has released a plug and play Advanced Segment for Google Analytics that implements most of what I explore below.

Either way, if you want to understand what is going on with referral spam, keep reading!

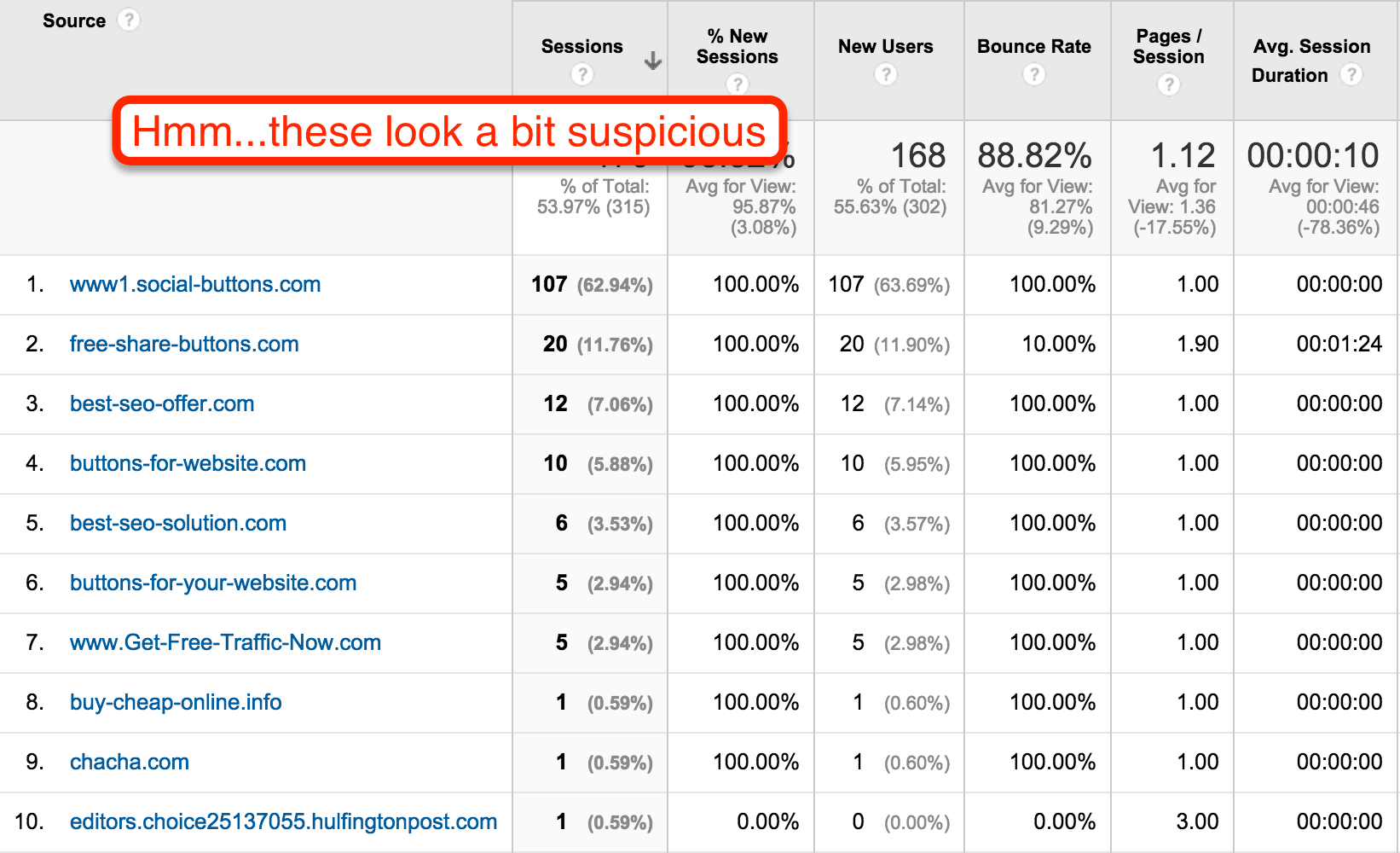

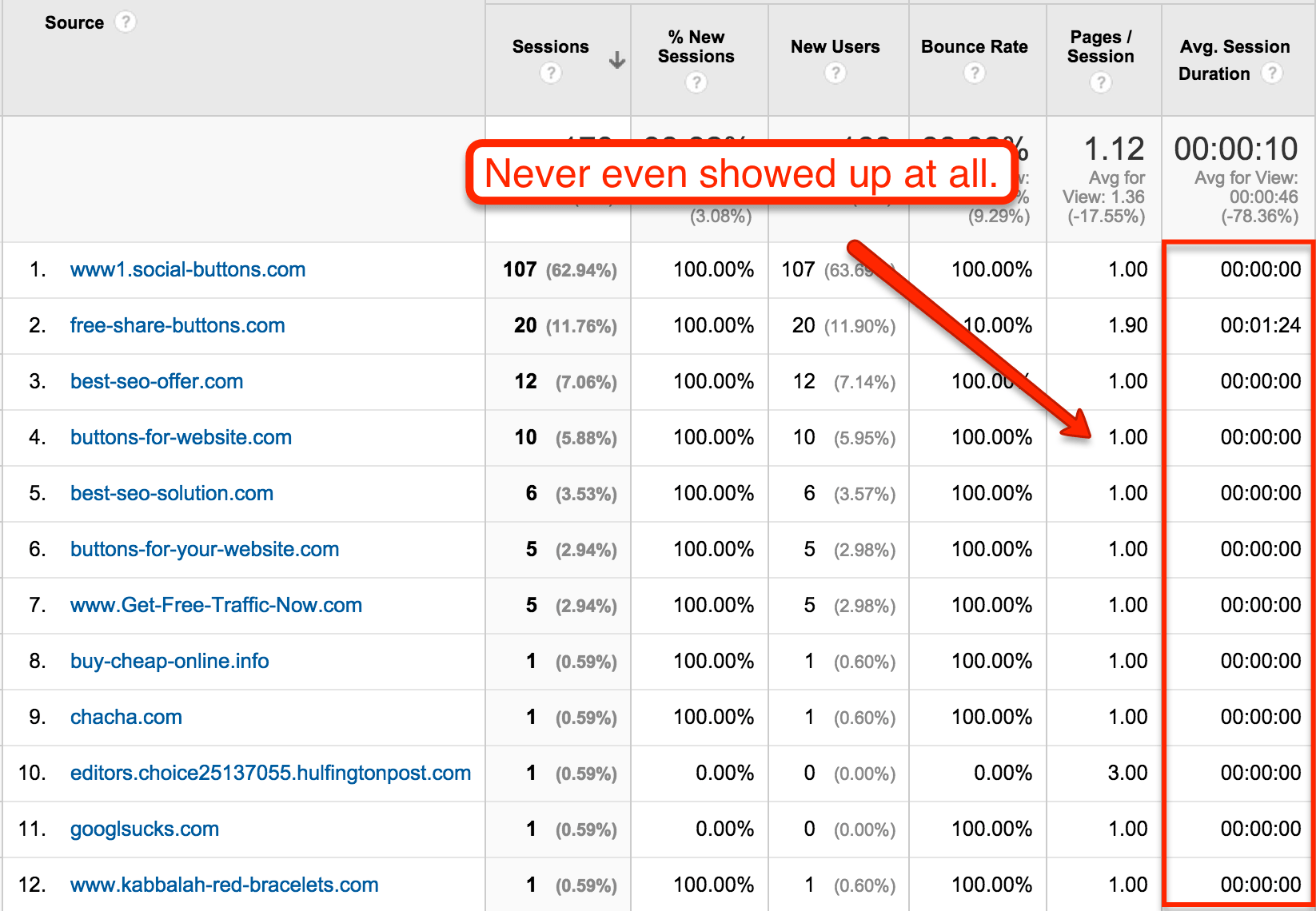

You’ve probably recently logged into your Google Analytics account and seen odd domains like darodar.com, buttons-for-website.com, hulfingtonpost, and countless others in your referral report.

Or, you’ve checked your organic keyword report and seen “ilovevitaly SEM.”

Or, you’ve seen a giant spike in direct/(none) traffic that appears one day and then goes away.

Or, you’ve seen visits to URLs that are not anywhere on your site (not even hacked pages).

Why Filtering Spam Matters

There are two types of spam that end up in your analytics profiles.

First, there are bots that do not visit your website. I call them “ghost bots.” Ghost bots are pure spam in the same vein as email spam, comment spam, and flyers under your car windshield. They mostly appear as Google Analytics referral spam, but never visit your website.

Second, there are bots that do visit your website. I call them “zombie bots.” Zombie bots generally produce analytics spam as a by-product of their various purposes. They do visit and fully render your website, and trigger your analytics code as a side effect.

The difference is key to understanding why they happen and how to stop them, but the effect is the same.

They both skew your data and pollute your website analytics. This leads to bad interpretations and bad marketing decisions.

Remember that analytics does more than count visits; it tells a full story of what is going on with your business online.

Even bots that only affect referral traffic skew the share of traffic from each medium, because every fake referral visit shrinks the share credited to your real channels. They affect your engagement numbers by skewing towards higher bounce rates and shorter durations. They decrease conversion rates, since bots never buy anything or submit a lead.
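To make the skew concrete, here is a small arithmetic sketch. The session and conversion counts are invented for illustration and do not come from any real account.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Return conversions per session as a percentage."""
    return 100.0 * conversions / sessions


# Hypothetical month: 1,000 human sessions, 20 of which convert.
human_sessions = 1000
conversions = 20

# Hypothetical spam: 250 bot sessions that never convert.
bot_sessions = 250

true_rate = conversion_rate(conversions, human_sessions)
reported_rate = conversion_rate(conversions, human_sessions + bot_sessions)

print(f"true conversion rate:     {true_rate:.2f}%")
print(f"reported conversion rate: {reported_rate:.2f}%")
```

With these made-up numbers the rate drops from 2.00% to 1.60% even though nothing about the real visitors changed.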

You can’t just mentally discount them or treat them as nuisances. You have to do something about them without negative side effects, such as damaging website performance or filtering real visitors (false positives) out of your analytics.

Who Does It & Why It’s Happening

I’ve written about comment spam and the people behind spam, and analytics spam is not too different.

Ghost bots are either people taking advantage of a nearly-free way to get in front of an audience or annoying digital graffiti artists.

Zombie bots are poorly or nefariously designed bots. Bots as a general rule are good and are a part of the infrastructure of the web. Googlebot is the most famous of course, but there are plenty of others that serve useful purposes. Web scraping is usually an introductory project in web development courses. Zombie bots, though, do not declare themselves as bots and will fully render a web page – analytics javascript and all.

Sometimes bots build a fraudulent ad network. Sometimes it’s for business intelligence. Sometimes it’s a computer science project gone awry. And sometimes, it’s simply to troll the entire marketing industry (as was the case with Vitaly’s attack in November 2016).

Either way, they leave a trail of spam in their wake and will always be around in some form.

How It Works & What To Do About It

There is no universal solution to all bots (without Google’s help), but there are a few things you can do to clean up your analytics.

Aside: There is a lot of bad advice around on this issue. Using the Referral Exclusion List under the Property settings is not recommended for filtering spam because:

- It’s not a universal solution.

- It’s not particularly accurate.

- It can just shift the visit to a (none)/Direct visit.

- It doesn’t allow you to check false positives with historical data.

There are a lot of sites (including very reputable ones) recommending server-side technical changes such as .htaccess edits. That’s also a bad idea.

Lastly, the Google Analytics checkbox to “Filter Known Bots & Spiders” does not work against ghost and zombie bots.

Here’s what to do in order to eliminate most analytics spam without risking your unfiltered data, filtering out false positives, or creating unsustainable server changes.

We’re going to create a separate view with a filter so you can have clean(ish) data from now on. We’ll create an advanced segment so that you can look at your historical view in a clean way.

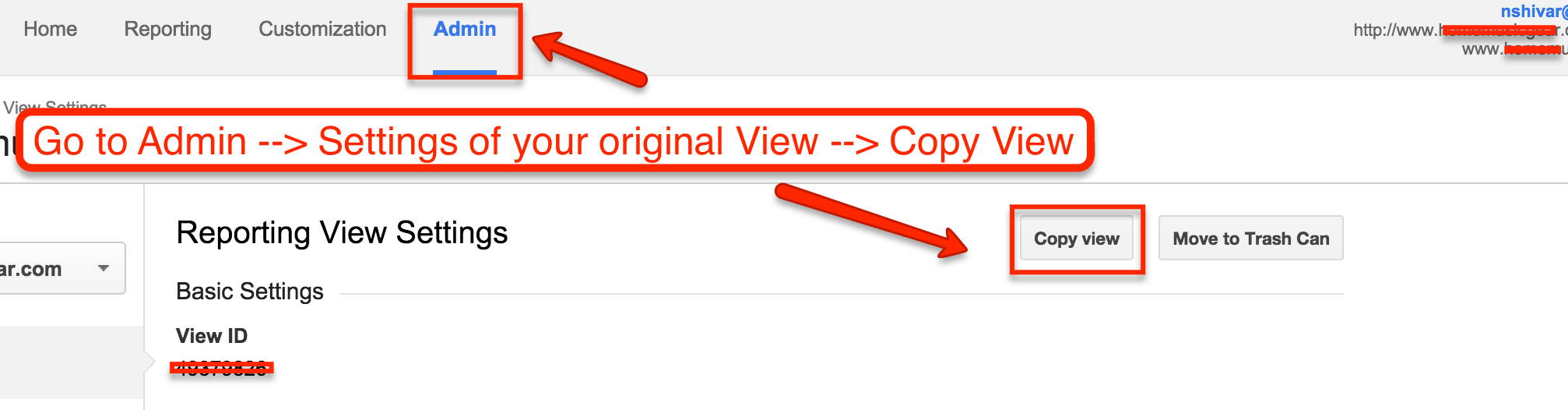

Start by creating a new view alongside the one you currently have in analytics. You always want to preserve one view that has 100% unfiltered data so that you have historical data and something to check against to make sure you’re not excluding false positives.

From your view’s dashboard, go to Admin, then go to settings, then Create Copy.

Name it something like 2 – [www.yourwebsite.com] // Bot Exclusion View.

We’ll now use this view to filter out all bot traffic. It will have no historical data at first, but will as time goes on. After setting up this view, we’ll set up an advanced segment to apply to the primary profile.

Filtering Ghost Bots

Ghost referrers are sessions showing up in analytics that never actually happened. The bot never requested any files from your server. It sent whatever data it wanted directly to your Google Analytics account by firing the analytics code with a random UA code (tracking ID). If you want to geek out: it can be done via the Measurement Protocol or by simply firing the Google Analytics code remotely. Normally, the Measurement Protocol is a way to input offline data into GA, but it is also easily abused.

The point is that your server cannot block or filter them because they never show up to your server in the first place.

You also cannot filter them as they show up in analytics because they change domain name variations frequently.
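To see why the server never gets a say, it helps to look at what a hit is made of. The sketch below only builds and prints a payload string using Universal Analytics Measurement Protocol field names. It sends nothing, and every value is a placeholder.

```python
from urllib.parse import urlencode

# Field names follow the Universal Analytics Measurement Protocol.
# Every value below is a placeholder chosen for illustration.
hit = {
    "v": "1",                       # protocol version
    "tid": "UA-XXXXXX-1",           # tracking ID (the "UA code"), placeholder
    "cid": "555",                   # anonymous client ID, arbitrary
    "t": "pageview",                # hit type
    "dh": "not-your-site.example",  # document hostname, whatever the sender types
    "dp": "/some-page",             # document path, need not exist on your site
    "dr": "http://spam.example/",   # document referrer, also free text
}

# Build the form-encoded body; nothing is transmitted here.
payload = urlencode(hit)
print(payload)
```

The hostname, page path, and referrer are all plain text supplied by the sender, which is why referrer names make a poor filter and why a sender who is only guessing tracking IDs usually gets the hostname wrong.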

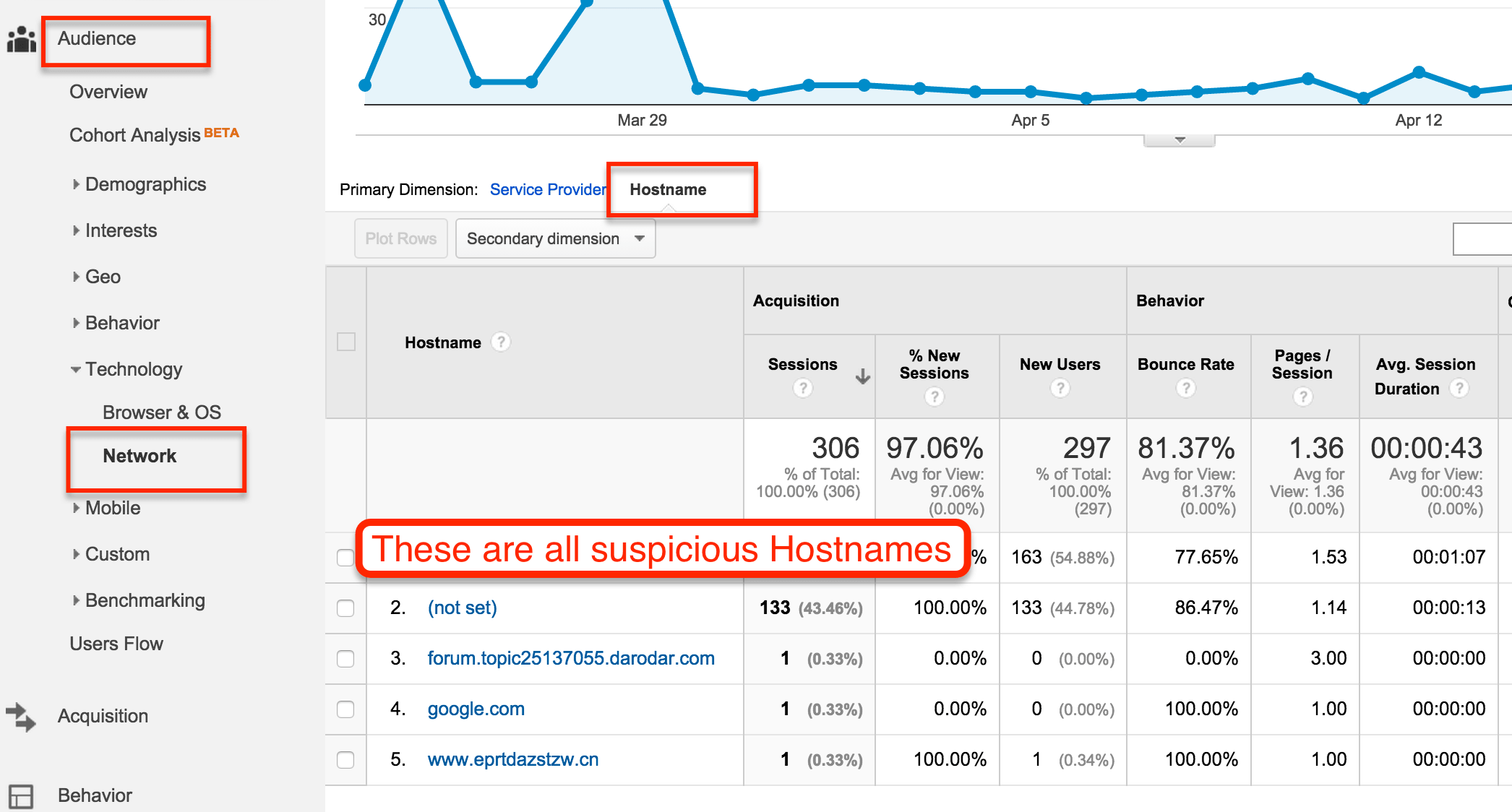

The solution is to filter by Hostname. In your reporting interface of your historic view, navigate to Audience → Technology → Network → select Hostname as primary dimension. Be sure to specify at least the last year as your date range.

Hostname is “the full domain name of the page requested.” For most ghost bots, this dimension is hard to fake since they are randomly calling UA codes, not actually visiting sites.

Go to your historic view hostname report and set the date range as far back as possible. You should find visits on your domain, translate.google.com, maybe web.archive.org. If you are an ecommerce store, your payment processor domain name will also be present. Everything else is probably spam, especially (not set) and hostnames that you know are not serving your content.

Take note of all the valid hostnames, then write a regex to include only the valid ones. A typical one would be:

yourwebsite.com|translate.google.com|archive.org

This regex will capture all subdomains on my main domain and anytime someone loaded my site within Google Translate or archive.org.
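Before saving a filter, you can sanity-check the pattern offline. This is a minimal sketch that assumes the filter field treats the pattern as an unanchored partial match. The sample hostnames are hypothetical.

```python
import re

# The pattern from the text, used as an unanchored partial match
# (an assumption about how the filter field treats it).
valid_hostnames = re.compile(r"yourwebsite.com|translate.google.com|archive.org")

# Hypothetical hostnames of the kind the Hostname report might list.
samples = [
    "www.yourwebsite.com",
    "shop.yourwebsite.com",
    "translate.google.com",
    "web.archive.org",
    "(not set)",
    "some-spam-domain.example",
]

kept = [h for h in samples if valid_hostnames.search(h)]
dropped = [h for h in samples if not valid_hostnames.search(h)]

print("kept:   ", kept)
print("dropped:", dropped)
```

Note that an unescaped `.` matches any character, so this pattern is slightly more permissive than it looks.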

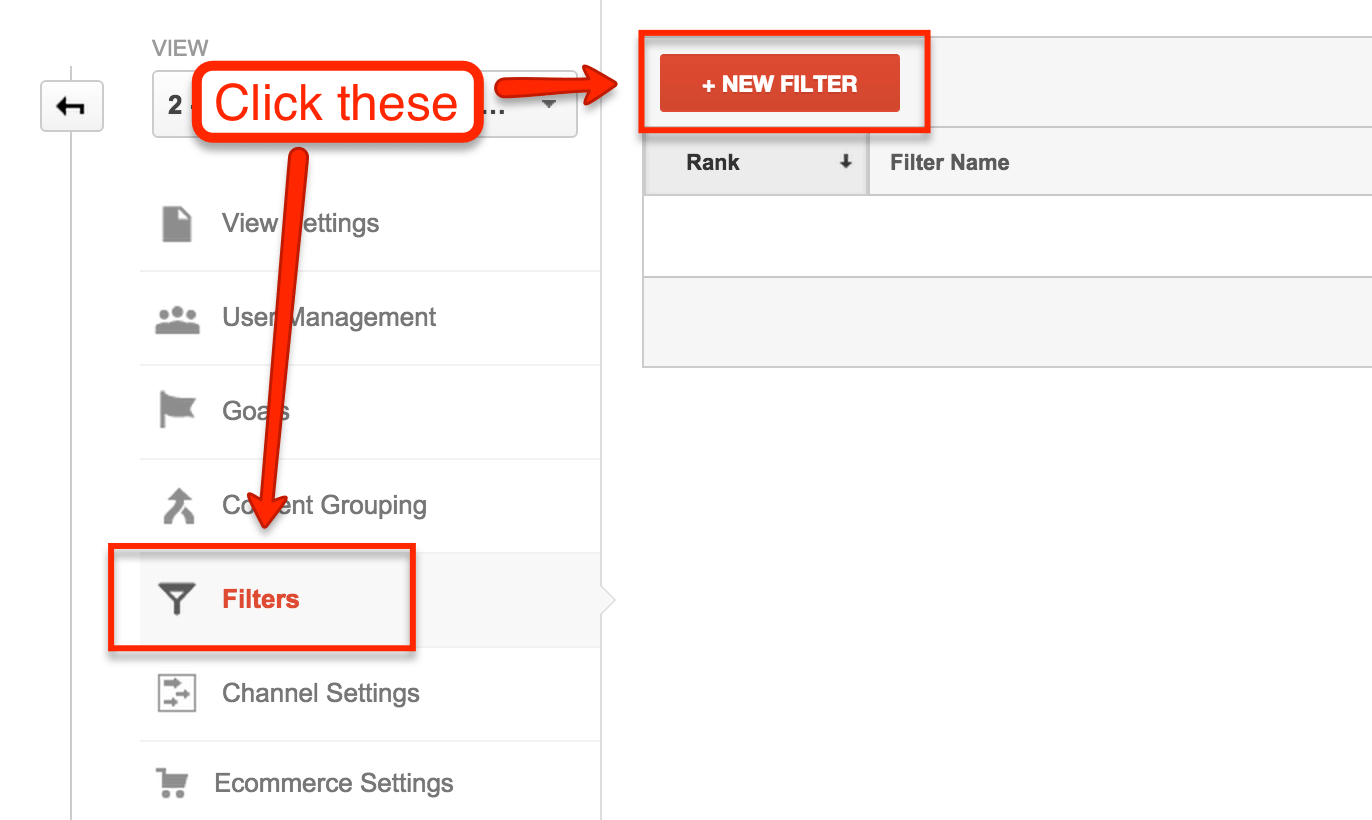

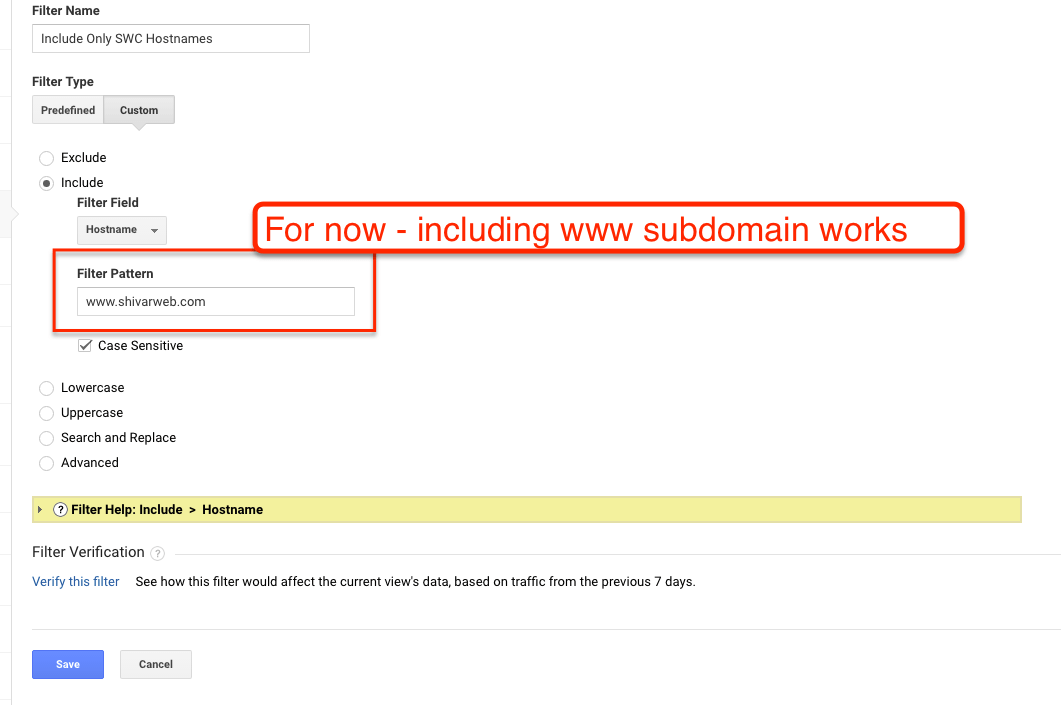

Now go to Admin → Filters in your Bot Exclusion view. Add a new custom filter.

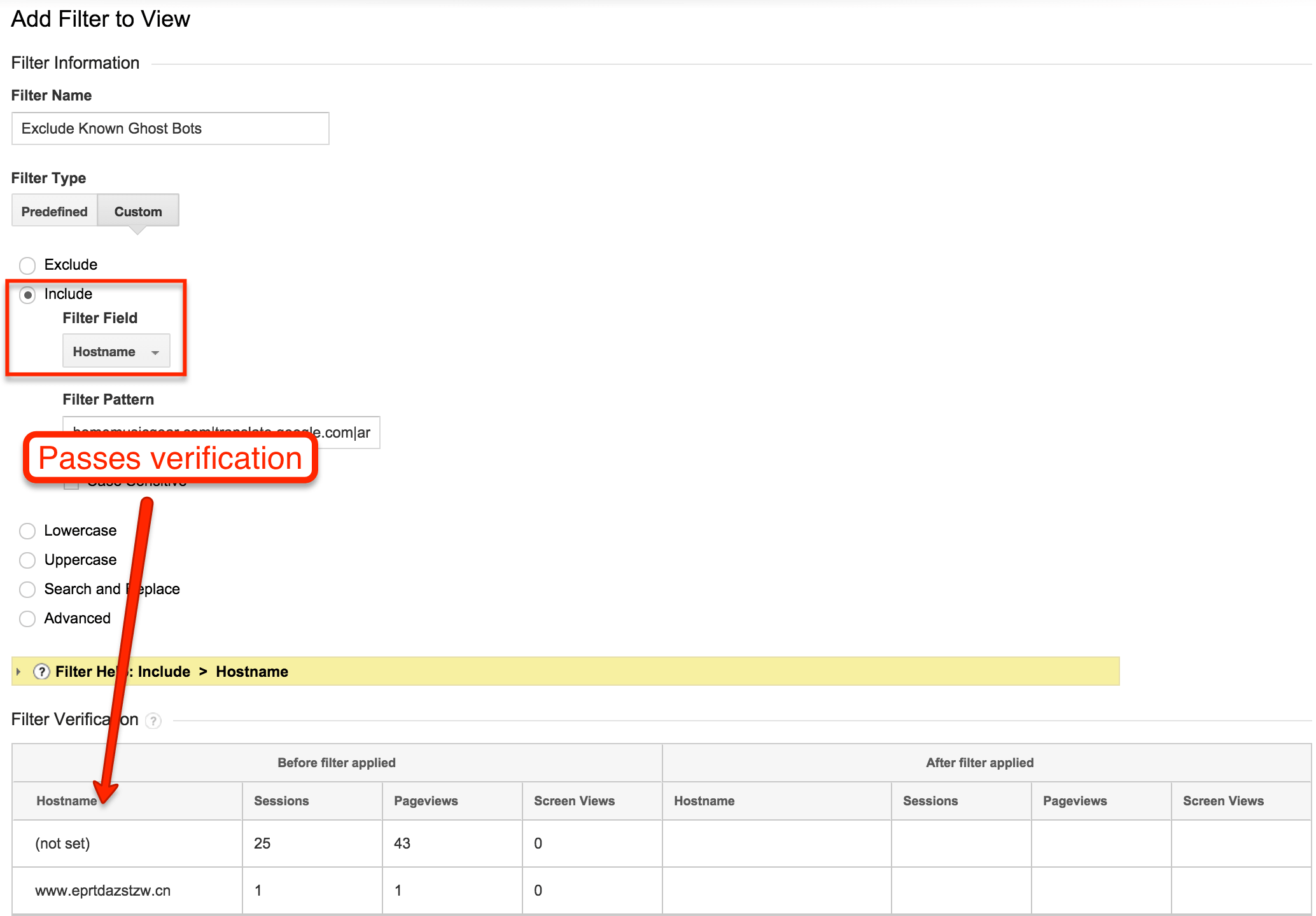

Select Include Only Hostname then add your regex into the field.

Name and save the filter.

This View is now filtering any ghost bots that do not set your domain name as the hostname dimension. It is not 100% – but it adds a major hurdle for many ghost bots. Until November 2016, it was pretty foolproof.

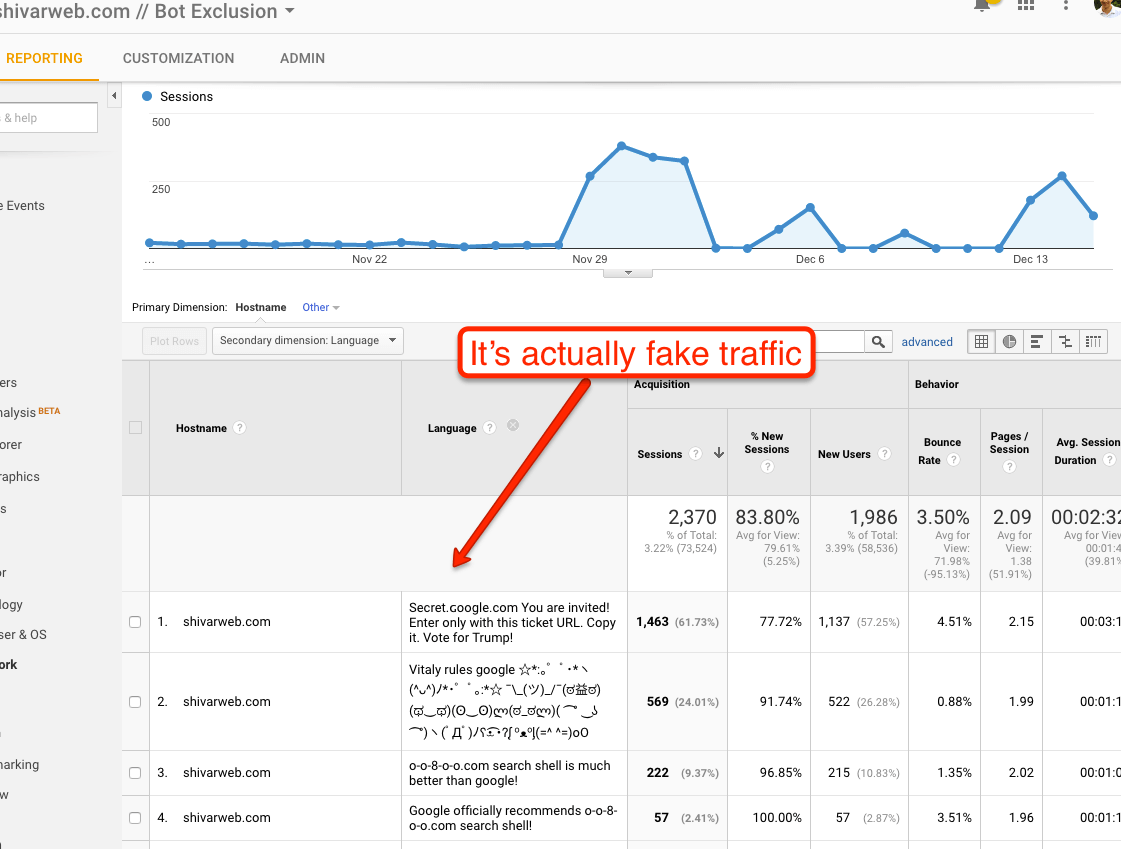

Now, not so much. With the latest round of ghost spam, spammers are able to spoof even the hostname based on a typical pattern.

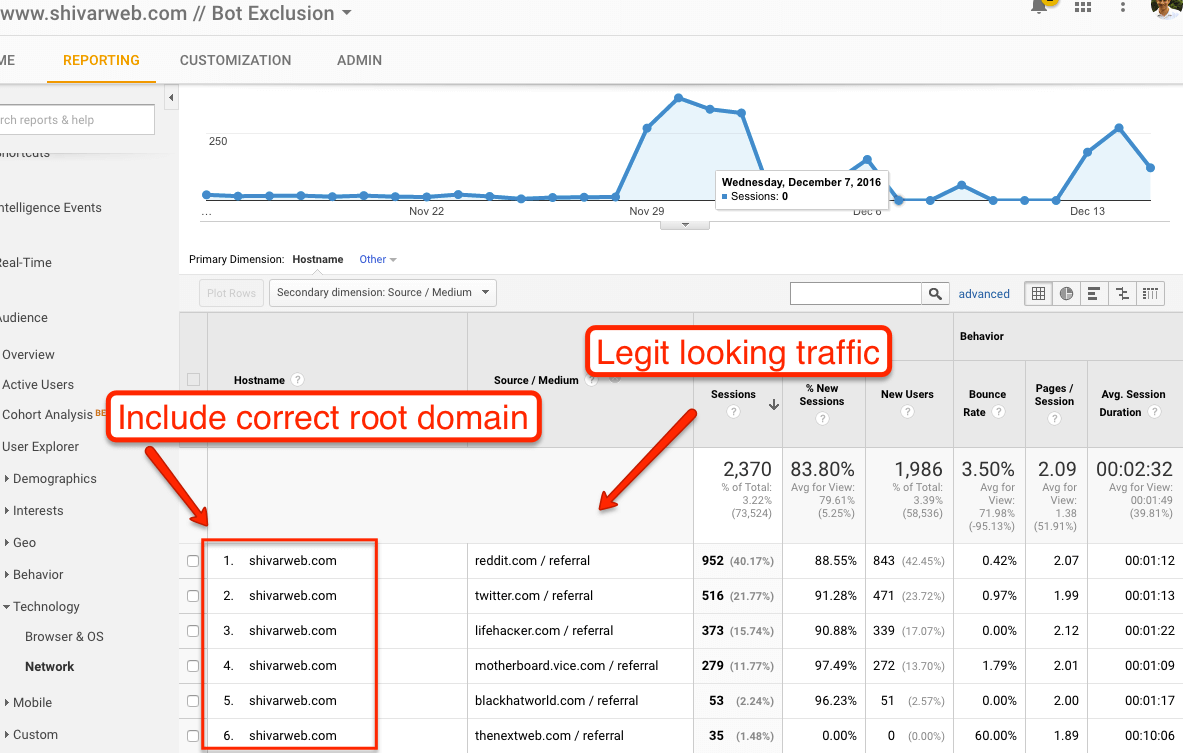

You need to be as specific as possible with this filter. Here’s what November 2016 looks like from one of my Bot Exclusion profiles.

But – my website is on a www subdomain, so my other bot exclusion profile (which is based on an Include Only larryludwig.com) filtered everything out.

Also, note that if you ever start serving content on a new subdomain (i.e., a new shopping cart or microsite), you’ll need to change the hostname filter.
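Here is a hedged sketch of what “as specific as possible” can mean in regex terms: escape the dots and anchor both ends. The domain and the spoofed values are hypothetical, and the anchored pattern is one possible choice rather than a universal recommendation.

```python
import re

# Loose: unescaped dots and no anchors, so it matches anywhere in the string.
loose = re.compile(r"yourwebsite.com")
# Strict: literal dots, anchored to the exact hostname that serves the site.
strict = re.compile(r"^www\.yourwebsite\.com$")

# Hypothetical hostnames: the real site on www, plus spoofed or lookalike values.
samples = [
    "www.yourwebsite.com",
    "yourwebsite.com",
    "yourwebsite.com.spam.example",
    "yourwebsite-com.example",
]

for host in samples:
    print(f"{host:32} loose={bool(loose.search(host))} strict={bool(strict.search(host))}")

loose_kept = [h for h in samples if loose.search(h)]
strict_kept = [h for h in samples if strict.search(h)]
```

The trade-off is maintenance: the strict pattern rejects every hostname it does not list, so each new legitimate subdomain has to be added by hand.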

You should also actively dig in your Analytics to look for suspicious traffic. The latest round used legit-looking traffic sources…but had very spammy language footprints.

Filtering Zombie Bots

Zombie bots allow you some more options since they actually visit and render your website. If you want to check out server-side solutions, this tutorial by InMotion Hosting is solid. Blocking them at your server not only adds a scrubbing layer to your analytics, it can also reduce load on your server resources.

That said, it does need good technical knowledge to not shut down your site or block false positives (aka real humans) from accessing your site. You also have to have resources to keep it maintained.

Here’s the approach I’ve found to filter zombie bots from analytics without implementing server-side filters.

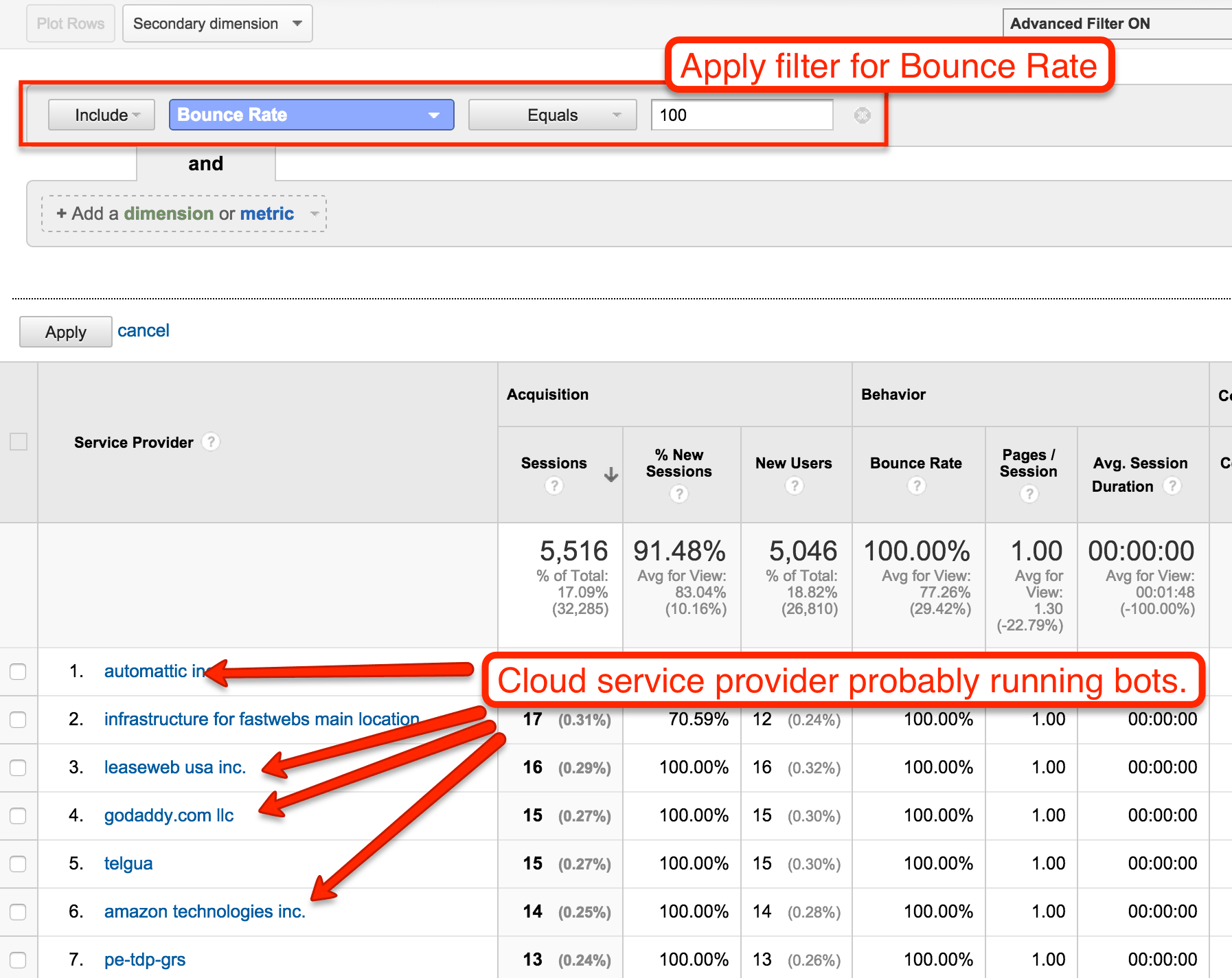

First you need to find a common footprint. Usually the most obvious footprint is under the Network Domain report, which you can find at Audience → Technology → Network Domain. This report details the ISP your visitors are on when visiting your site.

Typical human visitors will be using recognizable retail ISP brands such as Comcast, Verizon, maybe a university or business intranet. Few, if any, humans will be using “cloud service providers” or Tier 1 telecoms as their ISP.

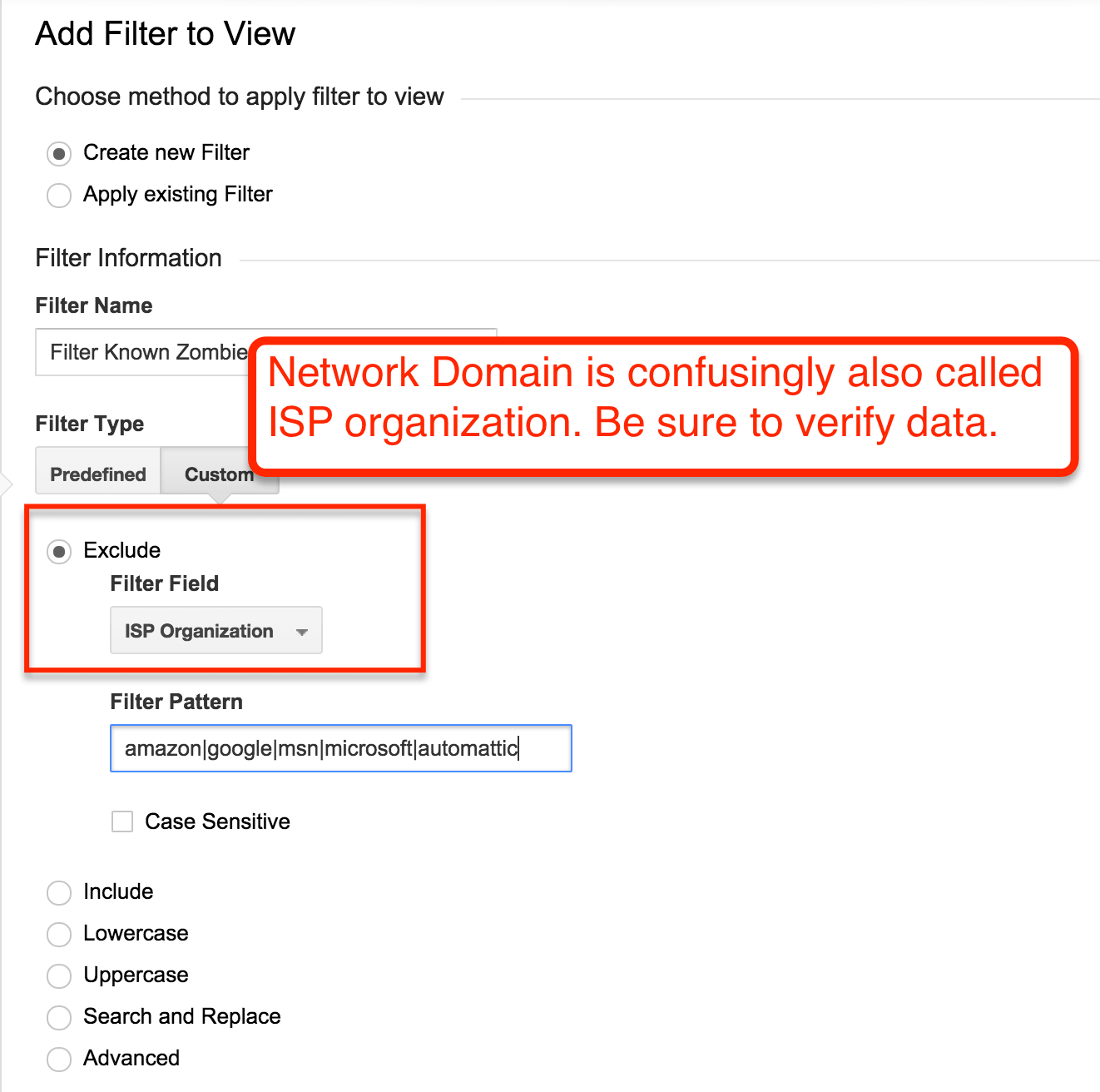

If you sort this report by Bounce Rate, a few should stand out. You should see MSN, Microsoft, Amazon, Google, Level3, etc. You also might see some fake Network Domains such as “Googlebot.com.” Take the ones that have non-existent user engagement and put them in a regex such as:

amazon|google|msn|microsoft|automattic
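A quick offline check shows what a pattern like this would catch. The Network Domain values below are hypothetical, and case-insensitive matching is an assumption made for this sketch.

```python
import re

# The pattern from the text; case-insensitive matching is an assumption here.
bot_networks = re.compile(r"amazon|google|msn|microsoft|automattic", re.IGNORECASE)

# Hypothetical Network Domain values.
samples = [
    "comcast.net",
    "verizon.net",
    "amazonaws.com",
    "googlebot.com",
    "Microsoft Corporation",
    "harvard.edu",
]

flagged = [n for n in samples if bot_networks.search(n)]
print(flagged)
```

Because these are substring matches, a short token such as `google` or `msn` can also catch a legitimate network whose name happens to contain it, so compare the flagged list against your unfiltered view before relying on it.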

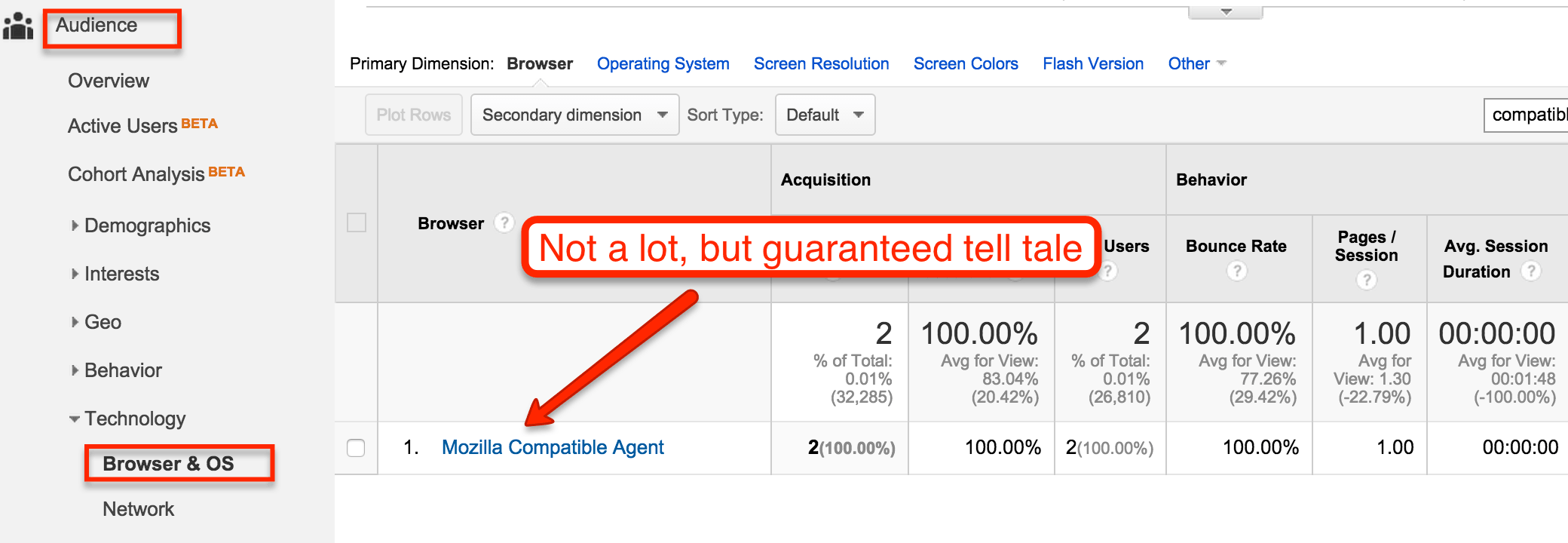

The next footprint you’ll use is under the Browser & OS report, which you can find at Audience → Technology → Browser & OS.

Here you’ll just confirm that you have visits from Mozilla Compatible Agent. These are likely bots. We’ll add them to a filter in a moment.

These first two footprints typically capture the vast majority of zombie bots. But before we add them as a filter, let’s look at how to identify zombie bots that may be hitting your site specifically.

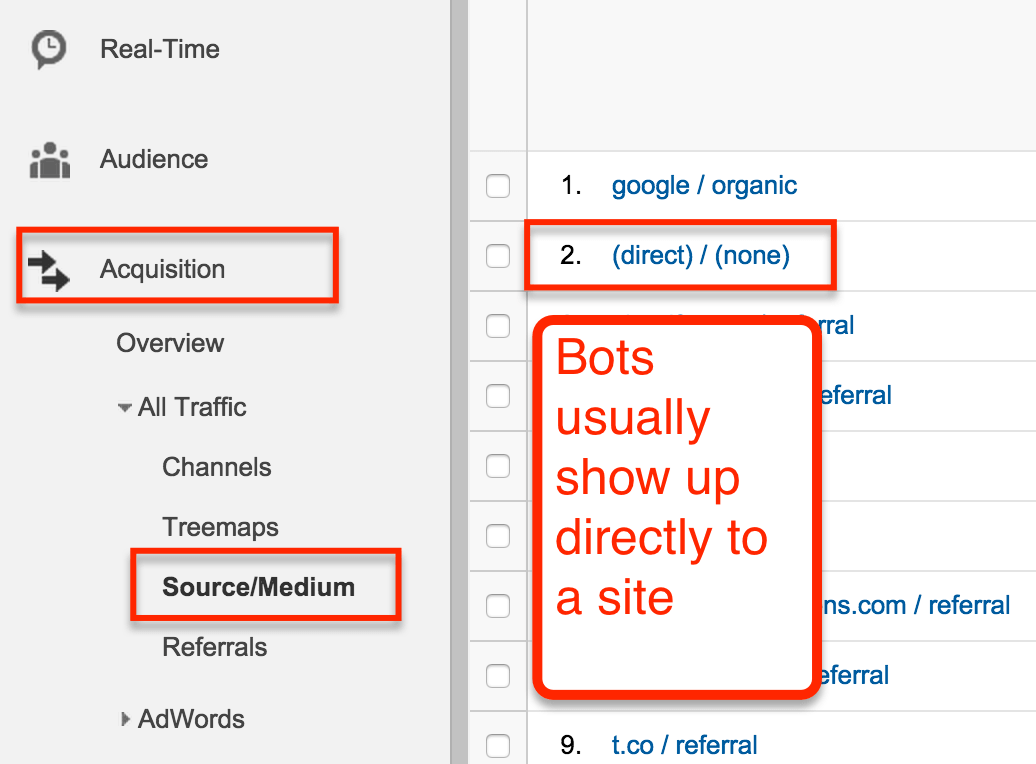

Go to Acquisition → All Traffic → Source/Medium → then look at each medium in turn.

Then add a secondary dimension and cycle through the dimensions under Users and Traffic. If you see a dimension value (say Internet Explorer 7) that has non-existent engagement metrics, it might be indicative of a bot.

Look for more footprints. For some zombie bots, there may not be any.
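If you export a report, the same hunt can be sketched in a few lines. The rows, field names, and thresholds below are all invented for illustration, so tune them against your own data.

```python
from dataclasses import dataclass


@dataclass
class Row:
    dimension: str        # e.g. a browser, network domain, or source
    sessions: int
    bounce_rate: float    # percent, 0-100
    avg_duration: float   # seconds


def suspicious(rows, min_sessions=20, min_bounce=95.0, max_duration=2.0):
    """Return rows with enough volume and essentially no engagement."""
    return [
        r for r in rows
        if r.sessions >= min_sessions
        and r.bounce_rate >= min_bounce
        and r.avg_duration <= max_duration
    ]


# Hypothetical export rows; the values are invented for illustration.
rows = [
    Row("Chrome", 5400, 48.2, 131.0),
    Row("Safari", 2100, 52.7, 118.0),
    Row("Internet Explorer 7", 310, 100.0, 0.0),
    Row("Mozilla Compatible Agent", 95, 98.9, 1.0),
    Row("Opera Mini", 4, 100.0, 0.0),   # too few sessions to judge
]

for r in suspicious(rows):
    print(r.dimension, r.sessions, r.bounce_rate)
```

The minimum-sessions threshold is there so that a handful of real visitors who bounced do not get labeled as a bot footprint.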

Now we’ll navigate back to the Admin section and Filters in your Bot Exclusion view.

We’ll repeat the steps for ghost bots, but instead of Hostname, you’ll create two new filters to exclude the Network Domain regex and the Browser/OS regex respectively.

For any more zombie bots, create a new filter based on what you’ve found. For example, you can create a new filter to Exclude all Referrals from example.com|example.com and/or any others you can find. Be sure to use the Verify Data feature to check your filter.
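When the list of referrers grows, building the pattern by hand gets error-prone. This sketch joins a list of hypothetical domains into one pattern with the dots escaped. Whether the filter field accepts the escaped form exactly as Python produces it is an assumption worth checking with Verify Data.

```python
import re

# Hypothetical spam referrers; replace with domains from your own reports.
spam_referrers = ["spamsite.example", "cheapseo.example"]

# re.escape turns each "." into a literal dot so lookalike domains do not match.
pattern = "|".join(re.escape(d) for d in spam_referrers)
exclude = re.compile(pattern)

print(pattern)
print(bool(exclude.search("www.spamsite.example")))  # subdomains still match
print(bool(exclude.search("legit.example")))         # unrelated domains do not
```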

Filtering with Advanced Segments

So you have a new view that will filter most bot traffic moving forward. It will need occasional amending and auditing, but overall it’s set to run on its own.

What if you want to look at historical traffic in your original view?

For that, you’ll need an Advanced Segment that replicates the Filters you put in place.

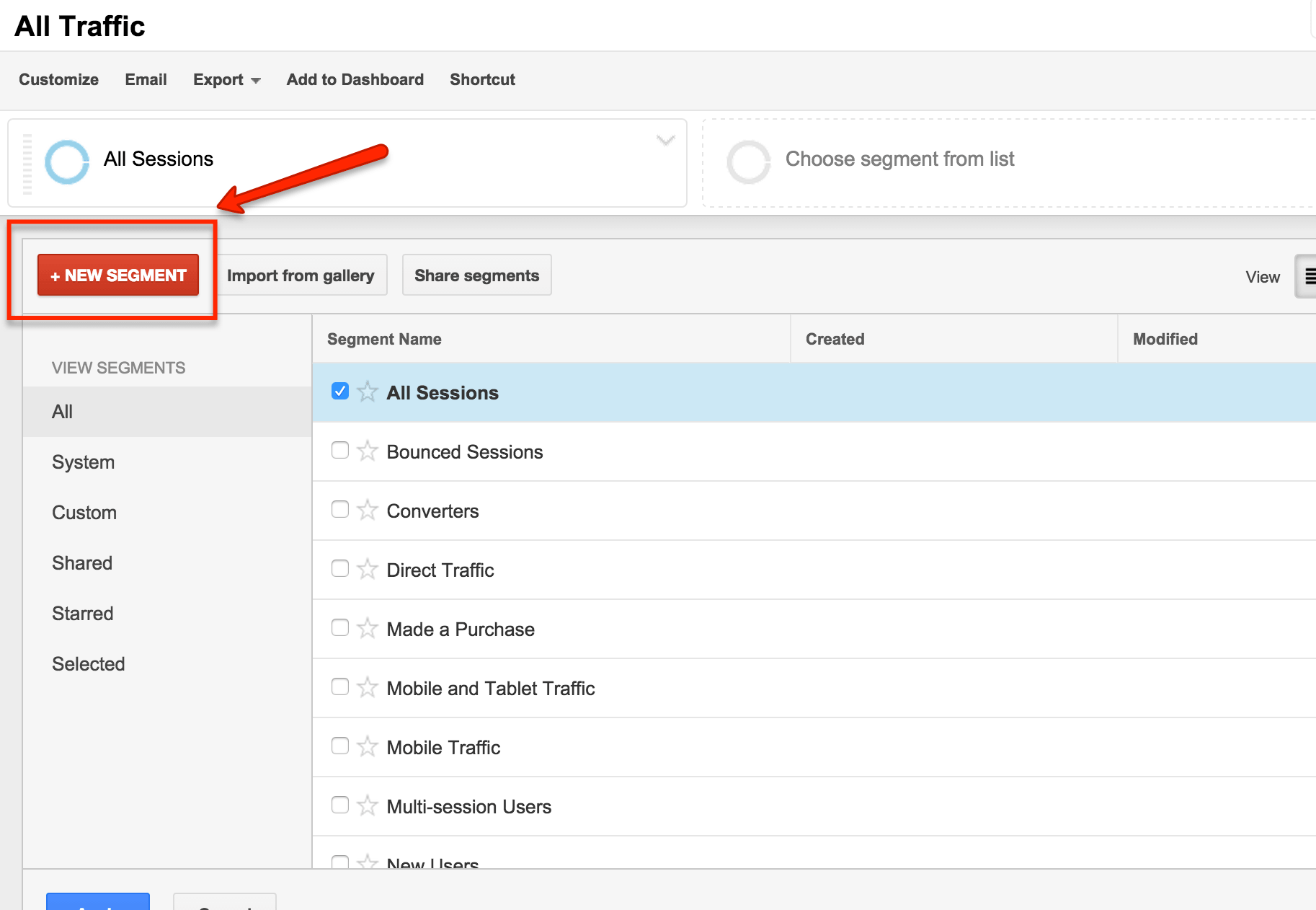

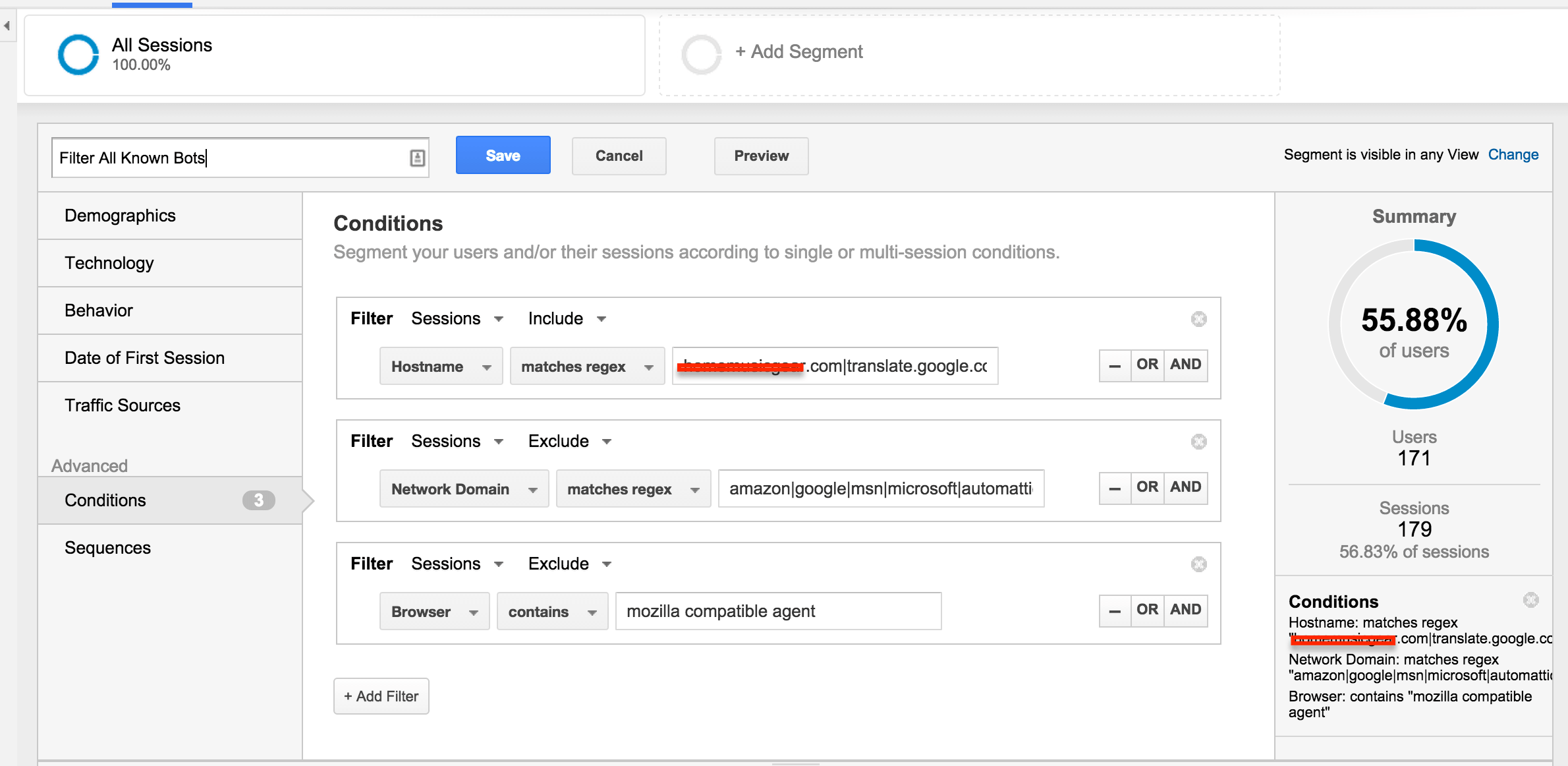

Go to the Reporting dashboard of your original view with historical data. Click on Add a Segment. Click on New Segment. Name it something, e.g., “Filter Known Bots.”

Click on Advanced → Conditions.

Now, you’ll add the filters that you set up for the new bot view. Be sure to note Include/Exclude. Be sure to use the verification feature on the right to check your filtering.

Save.
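The logic the segment has to reproduce is one include condition combined with the exclude conditions. As a rough sketch, with assumed patterns and invented field names, it amounts to this:

```python
import re

# Assumed patterns, mirroring the filters described for the bot exclusion view.
INCLUDE_HOSTNAME = re.compile(r"yourwebsite.com|translate.google.com|archive.org")
EXCLUDE_NETWORK = re.compile(r"amazon|google|msn|microsoft|automattic", re.IGNORECASE)
EXCLUDE_BROWSER = re.compile(r"Mozilla Compatible Agent")


def passes_segment(session: dict) -> bool:
    """Keep a session only if it matches the include and none of the excludes."""
    return (
        bool(INCLUDE_HOSTNAME.search(session["hostname"]))
        and not EXCLUDE_NETWORK.search(session["network_domain"])
        and not EXCLUDE_BROWSER.search(session["browser"])
    )


# Hypothetical sessions; field names are invented for this sketch.
sessions = [
    {"hostname": "www.yourwebsite.com", "network_domain": "comcast.net", "browser": "Chrome"},
    {"hostname": "(not set)", "network_domain": "(not set)", "browser": "(not set)"},
    {"hostname": "www.yourwebsite.com", "network_domain": "amazonaws.com", "browser": "Chrome"},
    {"hostname": "www.yourwebsite.com", "network_domain": "verizon.net", "browser": "Mozilla Compatible Agent"},
]

clean = [s for s in sessions if passes_segment(s)]
print(len(clean), "of", len(sessions), "sessions kept")
```

If the segment and the view filters ever disagree on the same date range, the usual culprit is an Include condition entered as an Exclude, or the reverse.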

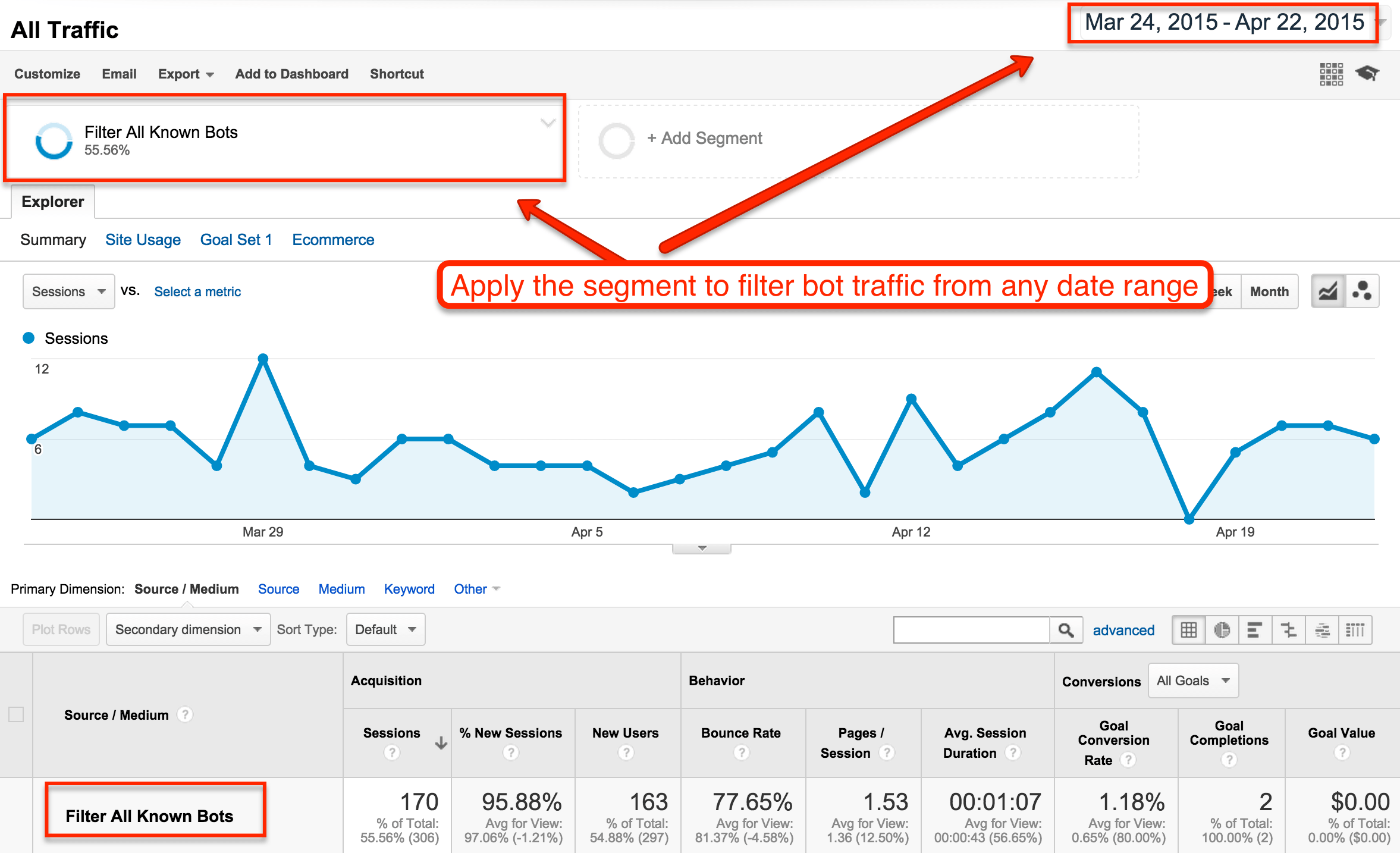

Now, you can select the Advanced Segment on any report. It will automatically filter the bot traffic for the selected date range. This is how you apply the segment to your historical data.

Next Steps

We’re currently at the low-level nuisance, frustrating, maddening stage of spam in Analytics. It happens often enough to notice, and often enough to mess with your data-driven campaigns if you don’t carefully monitor your numbers and seek out how-to posts like this one. But it doesn’t happen enough for Google, Adobe, and other giants of the web to craft a true solution.

Until the analytics giants create a new solution, we’re stuck creating filters that remove the majority of the bot traffic without capturing false positives.

- Identify to what degree your site is affected by ghost and zombie bots.

- Create a new view dedicated to filtering known bots.

- Add filters for ghost bots (Hostname) and zombie bots (Network Domain & Browser).

- In your historical view, create an advanced segment with the same filters so you can filter historical traffic.

- Commit to regular auditing of your analytics. Be skeptical of traffic numbers. Make sure you’re reading the right story.

For further information, check out AnalyticsEdge’s excellent post on the matter. Also check out the Bamboo Chalupa podcast episodes on “Why Your Analytics are Bullshit and What To Do About It” and “The Dark Side of Data-Driven: What To Do When Your Data Is Wrong.”
