Google Search Console (formerly Webmaster Tools) is Google’s suite of tools, data & diagnostics to “help you have a healthy, Google-friendly site.”

It’s the only place to get search engine optimization information about your website directly from Google.

Side note – Bing has a separate but similar tool suite at Bing Webmaster Tools.

Google Search Console (Webmaster Tools) is free, and any website can use it. But simply installing it will not improve your SEO or your organic traffic. It’s a toolset, which means you have to understand how to use Google Search Console effectively to make any difference on your website.

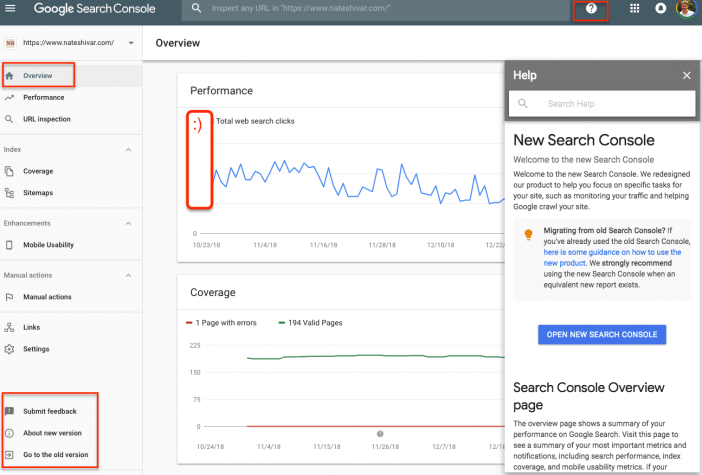

In 2018, Google launched an all-new Google Search Console. It’s out of Beta and open to the public. If you are used to the old Webmaster Tools, much of the data & structure will be familiar. Many pieces were removed, but overall your access to data is much improved: there is far more data, and it is far more accessible than in the old Webmaster Tools.

That said, it is still a bit daunting to understand. This tutorial will go through what each feature is, what you should be using it for, and some ideas on being creative with it.

Getting Started

To get started – you need a website. You’ll need to link your site to Search Console then take care of a few settings.

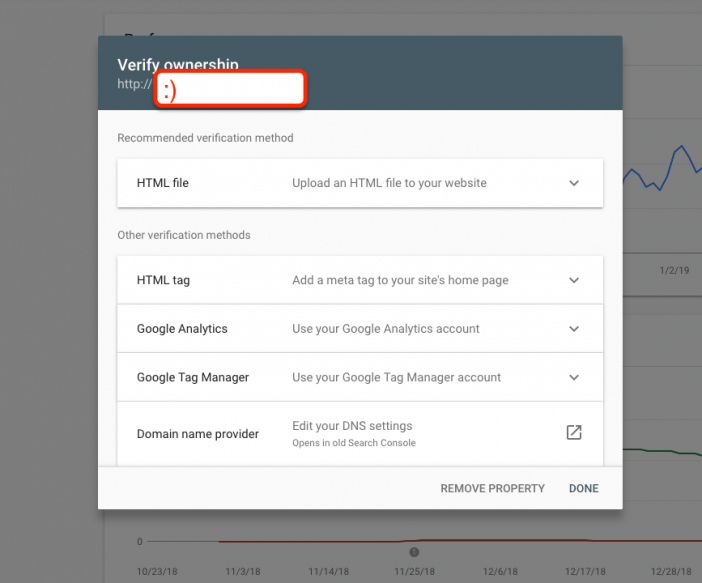

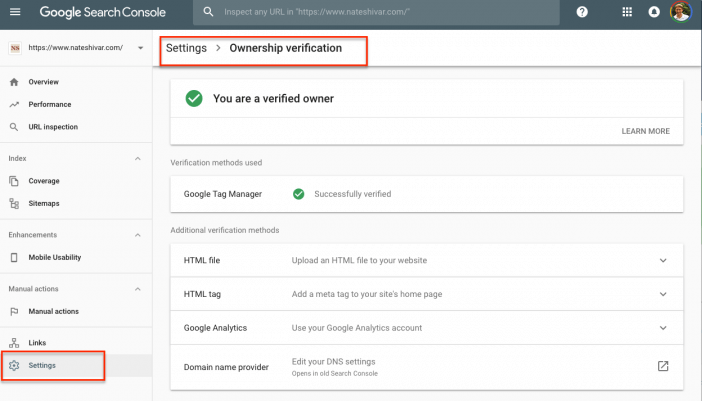

Verification

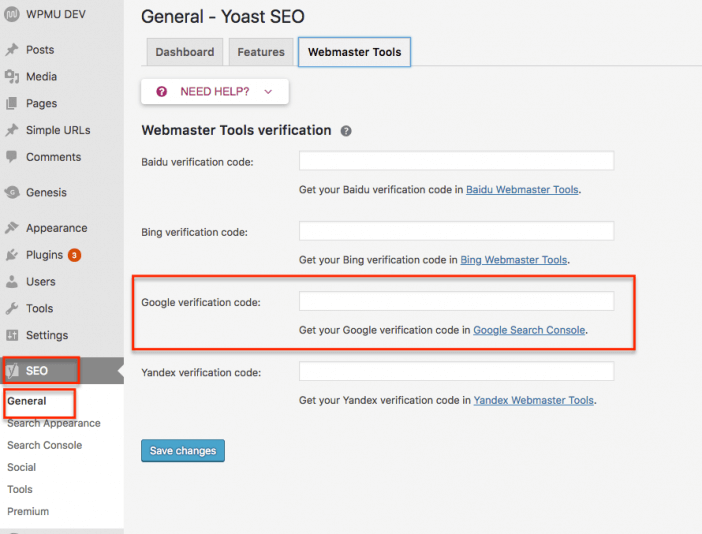

There are plenty of ways to verify your Search Console account. I prefer verifying via Google Analytics since it reduces the number of files / tags to maintain.

If you are using WordPress, the Yoast SEO plugin makes it simple. Keep in mind, though, that the plugin must stay active to maintain the verification.
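Plugins like Yoast typically verify your site with the HTML tag method. That method places a `google-site-verification` meta tag on your pages. A quick way to confirm the tag is still there is to parse your homepage HTML and look for it. The Python sketch below uses only the standard library. The token `abc123` and the sample HTML are placeholders. In practice you would first fetch your own homepage, for example with `urllib.request`.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content values of <meta name="google-site-verification"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)  # attribute names arrive lowercased
        if attr_map.get("name") == "google-site-verification":
            self.tokens.append(attr_map.get("content") or "")


def find_verification_tokens(html: str) -> list[str]:
    """Return every verification token found in the page HTML (empty list if none)."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens


# Placeholder page; in practice, fetch your own homepage HTML first.
page = '<html><head><meta name="google-site-verification" content="abc123"></head></html>'
print(find_verification_tokens(page))
```

An empty list after a plugin change is a hint that verification may soon lapse.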

When you are verifying your account, remember that a URL-prefix property in Search Console treats every subdomain and protocol as a different property.

That means that the HTTP and HTTPS versions of your site are different websites, and so are the WWW and non-WWW versions. Your data will be wrong if the property that you have verified with Google Search Console differs from the version of your website that Google is serving in the search results (SERPs). (Google has since added Domain properties, verified through DNS, which combine all protocols and subdomains into a single property.)
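To make this concrete, the minimal Python sketch below lists the four URL-prefix variants of one hypothetical domain (`example.com`). Each variant would be a separate URL-prefix property in Search Console.

```python
def url_prefix_variants(domain: str) -> list[str]:
    """Return the HTTP/HTTPS x WWW/non-WWW variants of a bare domain.

    Assumes `domain` is a bare hostname such as "example.com" (no scheme, no www).
    """
    variants = []
    for scheme in ("http", "https"):
        for host in (domain, f"www.{domain}"):
            variants.append(f"{scheme}://{host}/")
    return variants


for prop in url_prefix_variants("example.com"):
    print(prop)
```

Only one of these variants is usually the version Google serves, and that is the one whose data you want to read.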

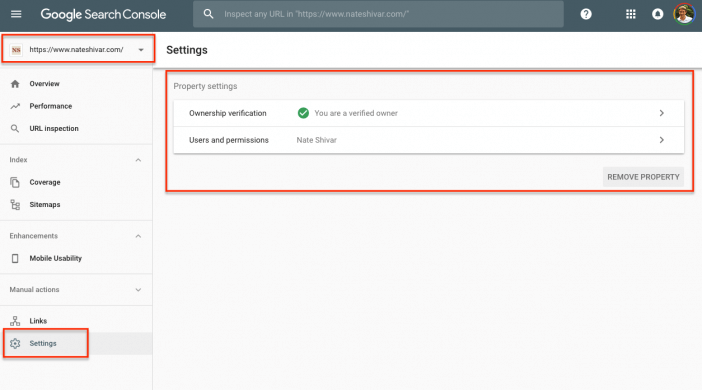

Understanding Settings

In the new Search Console, the settings are simplified and fairly straightforward. You get to maintain your ownership verification and manage users if needed.

Under ownership verification, you can change your verification method (e.g., if you switch plugins or DNS providers).

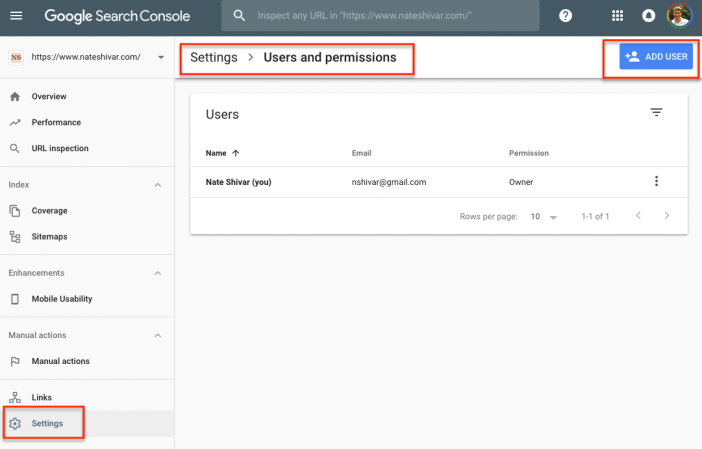

If you need to grant someone access, head to Users & Permissions. You can not only add users but also determine what they can and cannot do. If you are a DIYer or website owner evaluating an agency or consultant, this section is where you’ll go.

Like other sections in Search Console, the interface is clean and straightforward…once you realize that most things are clickable and sortable. The upside-down triangle is the sort & filter button, and you can click on most sections of information.

Enhancements & URL Inspection

The primary purpose of Search Console is to help you improve your website content to help Google’s users. In exchange, your website will be more likely to do well in Google Search. The Enhancements tab and URL Inspection tool both help you address how Google & other users “see” your website.

In a world where everyone uses their own browser, device, and custom settings – you might have website issues that you simply don’t know about. This section is where you start, though we’ll come back to technical troubleshooting in the Coverage section.

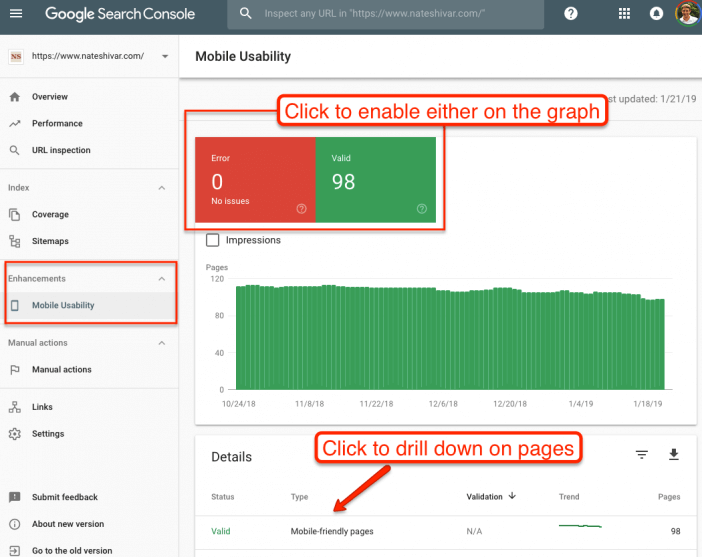

Mobile Usability

Google has stated that it intends to demote sites that aren’t mobile-friendly in mobile search results. In a way, it’s a side-issue to the fact that users hate websites that don’t work well on their devices.

If Googlebot finds any common usability errors, you’ll find them here. Treat them as medium priority: most errors here are not website-killers, but they are like weights that will slowly drag your site down.

And keep in mind that just because your site “has no errors” does not mean that it is mobile-friendly for users.

Before looking at these elements, don’t overlook Google’s handy pop-up glossary. It outlines the different SERP elements along with how to influence each one.

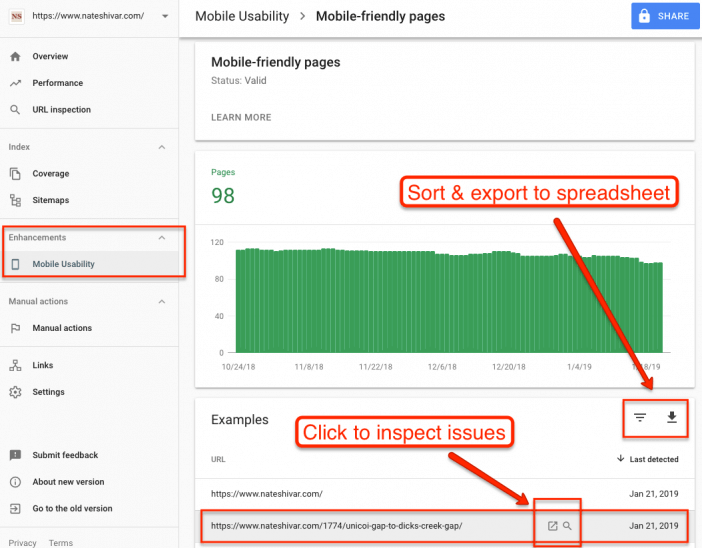

Google will tell you how many pages it has validated – which is a very different number than what appears in their index (which I’ll cover in a later section). However, the pages usually represent a large enough sample to diagnose any issues. Click through to get to individual pages or errors.

Once you’ve found a page with errors, you can use the URL inspection tool to figure out what’s going on.

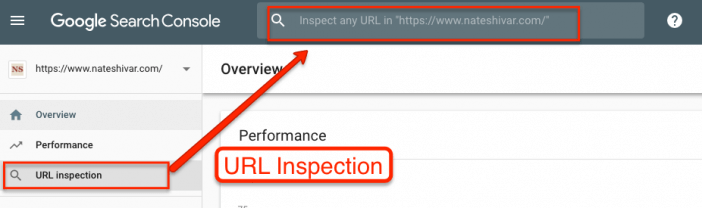

URL Inspection

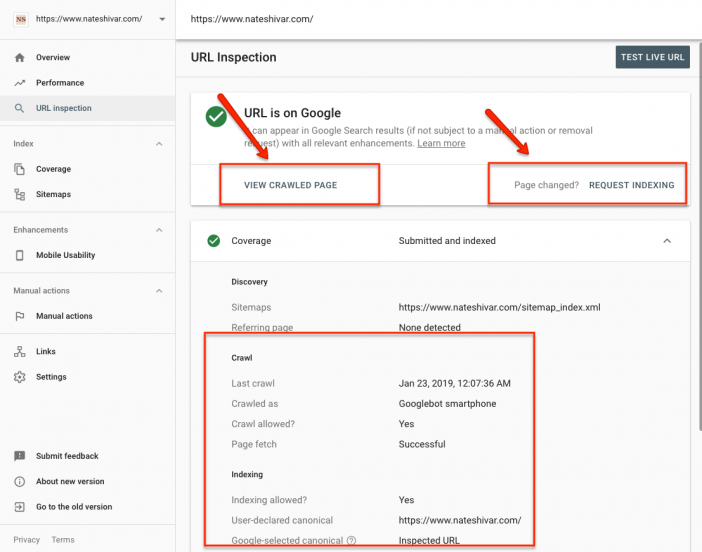

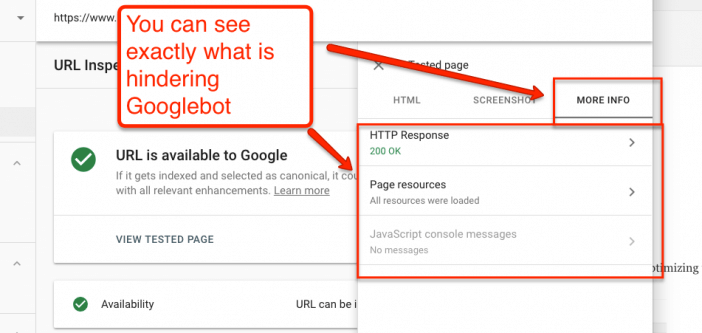

The URL Inspection tool is a more useful version of the old Fetch As Google tool. You can drop any URL on your site into the tool to get a detailed report on what Googlebot sees and understands, and how it handles the page.

The URL Inspection tool also absorbs the old Submit to Google tool through its Request Indexing button. If your page has changed significantly, you can request faster indexing. Beyond that, the report has *incredibly* detailed and useful information about how Googlebot has crawled & indexed your page.

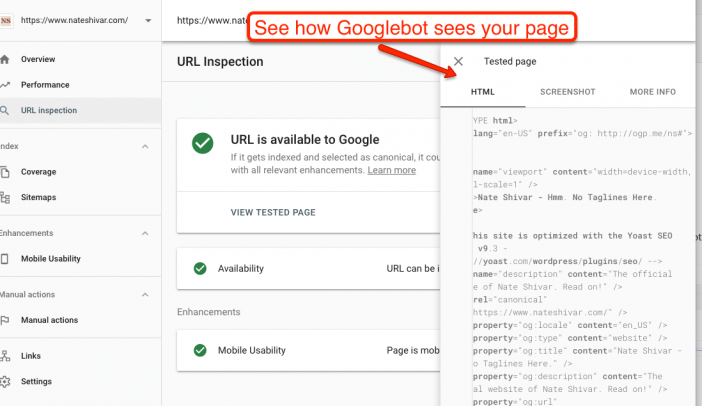

You can audit the actual HTML that Googlebot pulls to see whether your website throws any errors (e.g., blocked JavaScript).

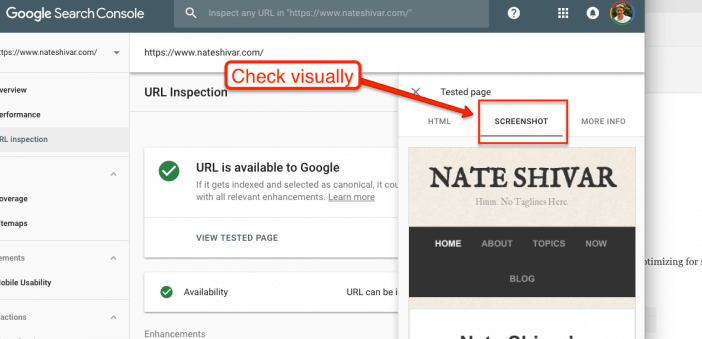

You can make sure that Googlebot renders your site visually in the same way that a human’s browser would render it.

And critically, you can see which resources Googlebot is getting hung up on. This information can dramatically speed up your troubleshooting. For example, if Googlebot is having a hard time downloading your CSS files, it will show up here.
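One common reason Googlebot cannot load a CSS or JavaScript file is a robots.txt rule that blocks it. The minimal Python sketch below is a rough pre-check built on the standard library's `urllib.robotparser`. The robots.txt rules and resource URLs are made-up examples. This parser applies rules in file order with simple prefix matching, which is not identical to Googlebot's longest-match and wildcard handling. Treat the URL Inspection tool as the authority.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-includes/
Disallow: /assets/js/
"""


def blocked_resources(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


resources = [
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/wp-includes/js/jquery.js",
]
print(blocked_resources(ROBOTS_TXT, resources))
```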

Now, the URL Inspection tool is amazing. The only issue is that it doesn’t really do bulk processing. You still need to know what to look for. And for that information, we’ll need to look at Performance and Coverage.

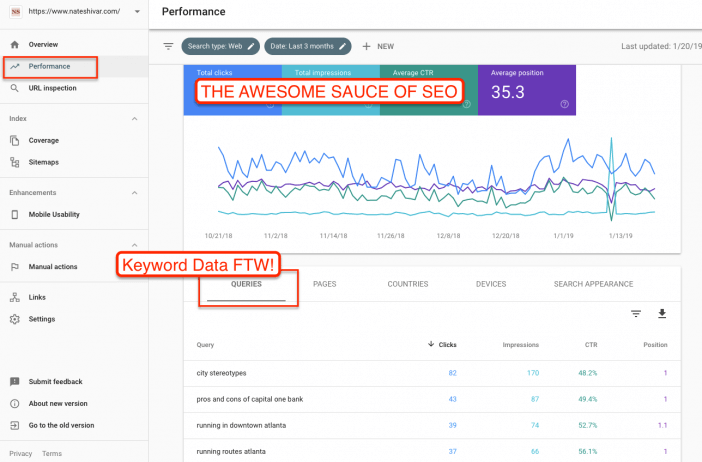

Performance

The Performance section is the most relevant day-to-day section of Search Console. It’s where you’ll get the most useful data for optimizing for search & increasing organic traffic.

The Performance section (formerly Search Analytics) is a recent addition to Search Console. It replaced the old (and much derided) “search queries” report, and it tells you a lot about how your website performs in Google Search. Before we break down how to manipulate and use the data, there are a few definitions to look at, directly from Google. Google has an expanded breakdown of definitions here.

Queries – The keywords that users searched for in Google Search.

Clicks – Count of clicks from a Google search results page that landed the user on your property. Note that clicks do not equal organic sessions in Google Analytics.

Impressions – How many links to your site a user saw on Google search results, even if the link was not scrolled into view. However, if a user views only page 1 and the link is on page 2, the impression is not counted.

CTR – Click-through rate: the click count divided by the impression count. If a row of data has no impressions, the CTR will be shown as a dash (-) because CTR would be division by zero.

Position – The average position of the topmost result from your site. So, for example, if your site has three results at positions 2, 4, and 6, the position is reported as 2. If a second query returned results at positions 3, 5, and 9, your average position would be (2 + 3)/2 = 2.5. If a row of data has no impressions, the position will be shown as a dash (-), because the position doesn’t exist.
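To make the arithmetic concrete, the minimal Python sketch below implements the CTR and Position definitions above. It is a simplification that mirrors Google's worked example: it treats each query as a single search and takes your topmost position for that query. It does not reproduce how Google aggregates across every individual impression. `None` stands in for the dash the report shows.

```python
from typing import Optional


def ctr(clicks: int, impressions: int) -> Optional[float]:
    """Click-through rate; None (shown as '-' in the report) when there are no impressions."""
    if impressions == 0:
        return None
    return clicks / impressions


def average_position(results_per_query: list[list[int]]) -> Optional[float]:
    """Average of your site's topmost position for each query that produced results."""
    topmost = [min(positions) for positions in results_per_query if positions]
    if not topmost:
        return None
    return sum(topmost) / len(topmost)


print(ctr(30, 1200))                             # 0.025
print(average_position([[2, 4, 6], [3, 5, 9]]))  # 2.5, matching (2 + 3)/2 above
```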

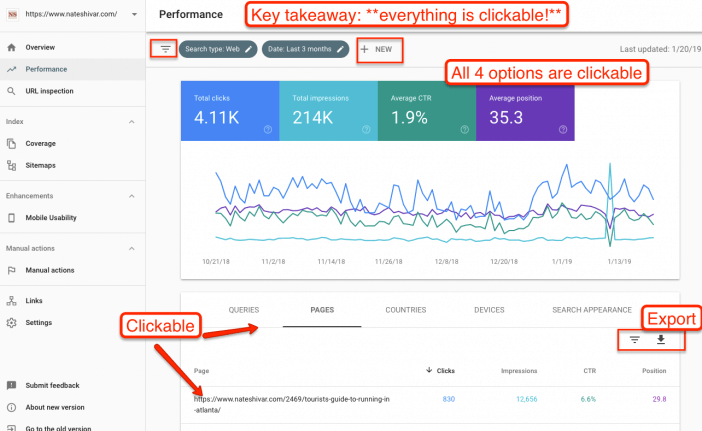

To use the Performance report effectively, you need to change the groupings to find the data you are looking for. Remember that you can change groupings after you apply a filter (e.g., you can look at Queries after you have filtered for a page). Also note that you can export all the data to a spreadsheet if you are looking at large groupings.

The number one tip I have is – have a hypothesis and click, sort & drill down as needed. You can click, sort and filter almost everything in this report. You have 16 months’ worth of data. It’s a goldmine that’s easy to get lost in. I have a sequel post focused only on Performance here, but for now, here are my 2 favorite pieces of data to pull.

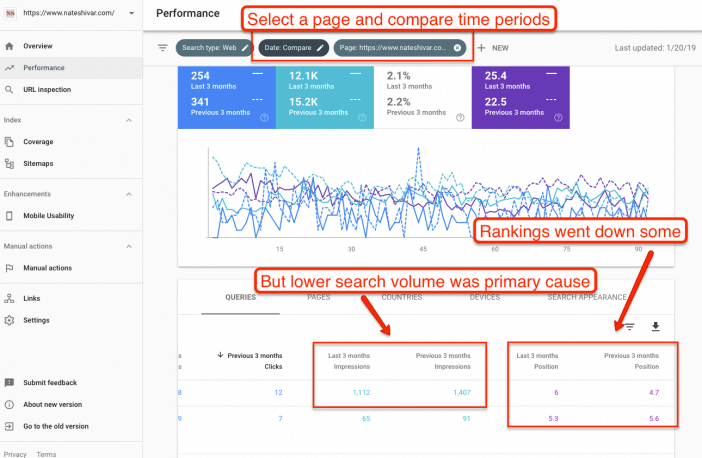

Diagnose Why A Page Is Losing Traffic

- Check all metrics boxes

- Filter by page, select a date range

- Click through to Queries, Countries, and Devices, looking for a culprit
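Suppose you export the Queries table for the same page over two date ranges. A few lines of Python can then rank queries by lost clicks. This is a minimal sketch. `load_clicks` assumes the export's columns are named `Top queries` and `Clicks`; check your file's header row and adjust. The sample numbers are made up. The same comparison works for the Countries and Devices tables.

```python
import csv


def load_clicks(path: str, query_col: str = "Top queries", clicks_col: str = "Clicks") -> dict[str, int]:
    """Read a Performance export into {query: clicks}.

    The default column names are assumptions; match them to your file's header.
    "utf-8-sig" also copes with a byte-order mark at the start of the file.
    """
    with open(path, newline="", encoding="utf-8-sig") as f:
        return {row[query_col]: int(row[clicks_col]) for row in csv.DictReader(f)}


def biggest_drops(before: dict[str, int], after: dict[str, int], top_n: int = 10) -> list[tuple[str, int]]:
    """Return (query, click change) pairs, most negative change first.

    A query missing from one period is treated as having 0 clicks there.
    """
    queries = set(before) | set(after)
    changes = [(q, after.get(q, 0) - before.get(q, 0)) for q in queries]
    changes.sort(key=lambda item: item[1])
    return changes[:top_n]


# Hypothetical numbers for one page: previous period vs. current period.
before = {"search console tutorial": 120, "what is ctr": 40, "gsc links report": 15}
after = {"search console tutorial": 45, "what is ctr": 42}

for query, change in biggest_drops(before, after):
    print(f"{change:+d}  {query}")
```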

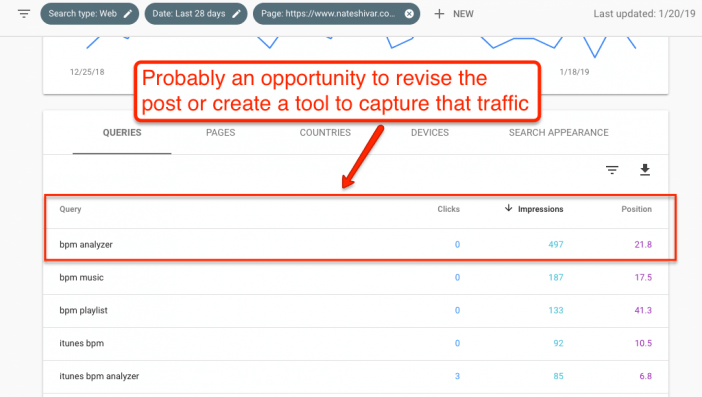

Look For New / Revised Content Ideas

- Check all metrics boxes

- Filter by page

- Click to Queries

- Sort by Impressions

- Look for queries that are not directly related to the page, but where the page is still ranking

- Use this data to either revise the content to address that query OR create a new page targeting that query
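Once you export the Queries table for a page, you can roughly automate the last two steps. This minimal sketch assumes you supply your own list of target terms for the page. The terms, queries, impression counts, and the `min_impressions` threshold of 50 are all hypothetical.

```python
def off_topic_queries(rows: list[tuple[str, int]], target_terms: list[str], min_impressions: int = 50) -> list[tuple[str, int]]:
    """Return (query, impressions) rows that mention none of the page's target terms.

    Results are sorted by impressions, highest first, and rows below
    `min_impressions` are dropped as noise.
    """
    terms = [t.lower() for t in target_terms]
    candidates = [
        (query, impressions)
        for query, impressions in rows
        if impressions >= min_impressions and not any(term in query.lower() for term in terms)
    ]
    return sorted(candidates, key=lambda item: item[1], reverse=True)


rows = [
    ("google search console tutorial", 900),
    ("how to verify site with google analytics", 310),
    ("search console coverage excluded", 150),
    ("sitemap xml example", 40),
]
print(off_topic_queries(rows, ["search console", "webmaster tools"]))
```

Each query that survives the filter is a candidate for either a content revision or a new page.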

Here’s a brief video showing how I think through the different tabs. They have slightly changed the design, but the data, tabs and process are all the same.

Aside – I have an entire post on 15+ ways to use Search Analytics effectively here.

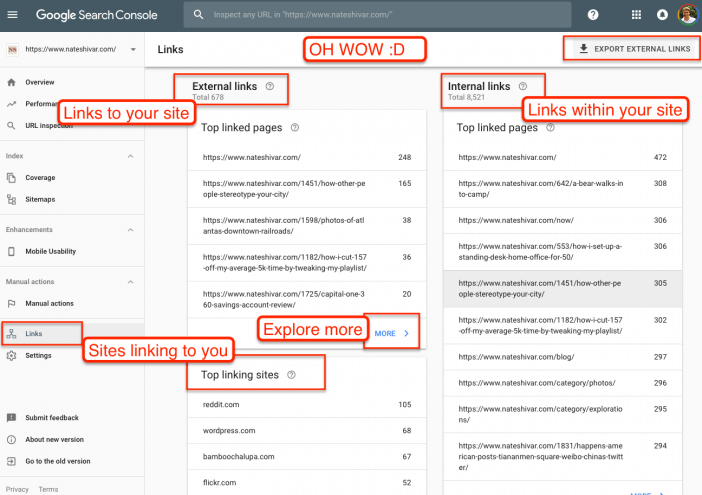

Links

Links have always been (and will always be) critical for success on Google organic search results. But links are still the hardest piece of SEO to understand, build and optimize. And lack of data plays a large part.

The new Search Console provides much more link data than the old Webmaster Tools. The reports are more accessible, and possibly more complete than ever.

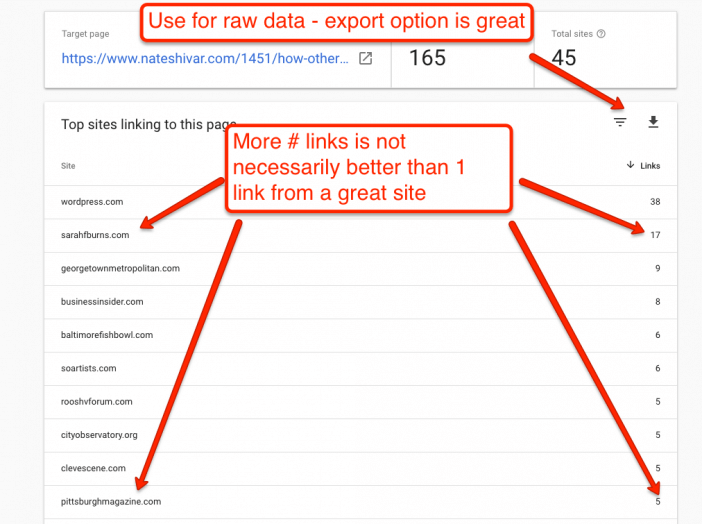

However, be careful analyzing these reports. Google’s new reports have a heavy bias towards link quantity over link quality. And link quality is where you can truly win.

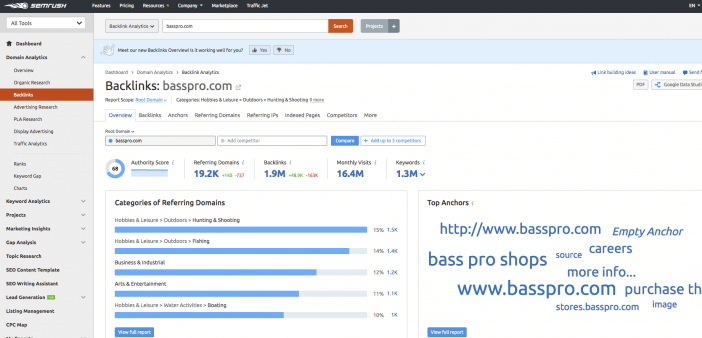

If you are serious about SEO, then I don’t think you can do without premium tools like Ahrefs.

However, Search Console link data is not only extensive, but it’s free and easy to download.

Understand and analyze your Search Console link data, but be sure to download it to a spreadsheet and combine it with Ahrefs’ data.
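One simple way to combine the two sources is to compare their lists of linking domains. This Python sketch assumes you have already pulled the domain column out of each export; column names vary by tool. The domains shown are placeholders. The sketch normalizes case and a leading `www.` before comparing.

```python
def normalize_domain(domain: str) -> str:
    """Lowercase and drop a leading 'www.' so both exports use the same form."""
    domain = domain.strip().lower()
    return domain[4:] if domain.startswith("www.") else domain


def compare_linking_domains(gsc_domains: list[str], third_party_domains: list[str]) -> dict[str, list[str]]:
    """Split linking domains into those seen by both sources or by only one."""
    gsc = {normalize_domain(d) for d in gsc_domains}
    other = {normalize_domain(d) for d in third_party_domains}
    return {
        "both": sorted(gsc & other),
        "only_search_console": sorted(gsc - other),
        "only_third_party": sorted(other - gsc),
    }


gsc = ["example.org", "www.blog.example.net", "news.example.com"]
third_party = ["Example.org", "blog.example.net", "forum.example.io"]
print(compare_linking_domains(gsc, third_party))
```

The "both" list is a good place to start manually tagging link quality, since two independent sources agree those links exist.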

Here’s what each report means.

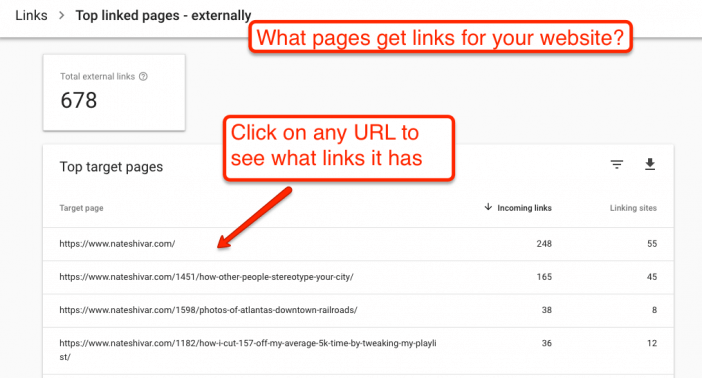

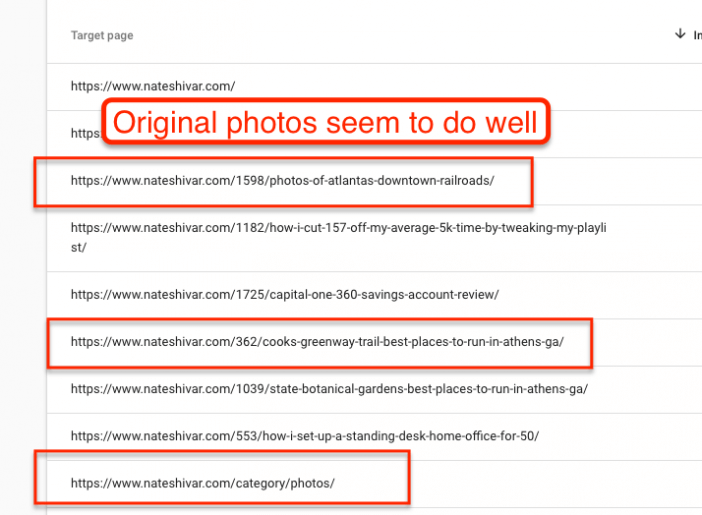

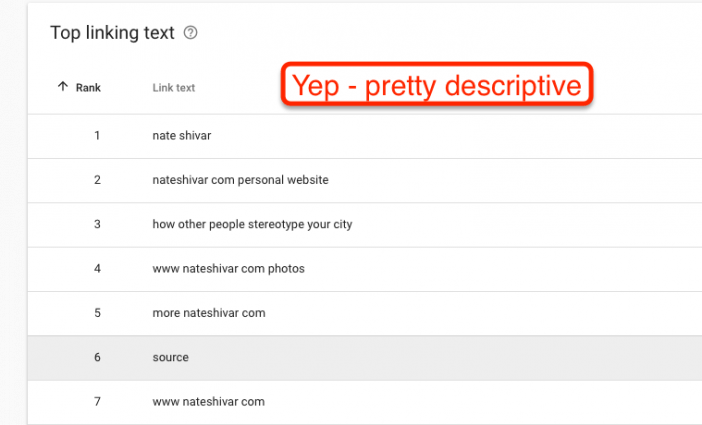

External Links

Google understands the Web via links, and they are still one of the most important factors in Google’s algorithm. This section helps you understand who links to you, what content gets linked most, and what anchor text other sites use to link to you.

There are three items to remember when looking at this section.

First, Google doesn’t give you all its link data. As I’ve already mentioned, most professional SEOs & site owners with a budget will use a premium tool like Ahrefs or SEMrush’s Backlink Tool to pull more useful & qualitative data (if you don’t have much budget, try LinkMiner or SEMrush’s free trial).

Second, there’s no way to know how Google uses this data for any given query. Don’t get too focused on any single link or string of anchor text. Instead, use it for big picture marketing ideas & problem diagnosis.

Third, there is more data in this section than you’d expect. The key is to just keep clicking to find out more.

Here’s what you should do with your Links Report.

First, use it to understand what type of content gets links. You can use that data to do more of what is working.

Be sure to use the export function so that you can manually “tag” quality links and pair them with SEO tool data.

Second, use it to understand what types of sites link to you. Use that data to find similar sites for content promotion. You can also use it to understand just how much of the web is spam.

Third, look at your anchor text to make sure it’s generally telling the right “story.” Look for spam terms that could indicate your site has been hacked.
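A crude first pass at that spam check is a keyword scan over the exported anchor texts. The term list below is purely illustrative and far from complete. Build your own from what you actually see in your reports.

```python
# Illustrative only: replace with terms you actually see in your own reports.
SPAM_TERMS = ("viagra", "casino", "payday loan", "replica watches")


def flag_spammy_anchors(anchors: list[str], spam_terms: tuple[str, ...] = SPAM_TERMS) -> list[str]:
    """Return anchor texts that contain any spam term (case-insensitive)."""
    lowered_terms = [term.lower() for term in spam_terms]
    return [a for a in anchors if any(term in a.lower() for term in lowered_terms)]


anchors = ["Google Search Console guide", "Cheap VIAGRA online", "best casino bonus 2018", "example.com"]
print(flag_spammy_anchors(anchors))
```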

For many website owners, it’s enough to survey your links and keep doing what you are doing. The Search Console interface is great at that.

But it’s also an underrated advanced tool to pair with data from 3rd party SEO tools.

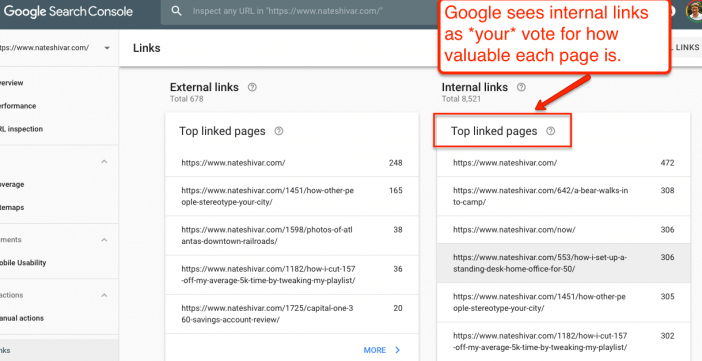

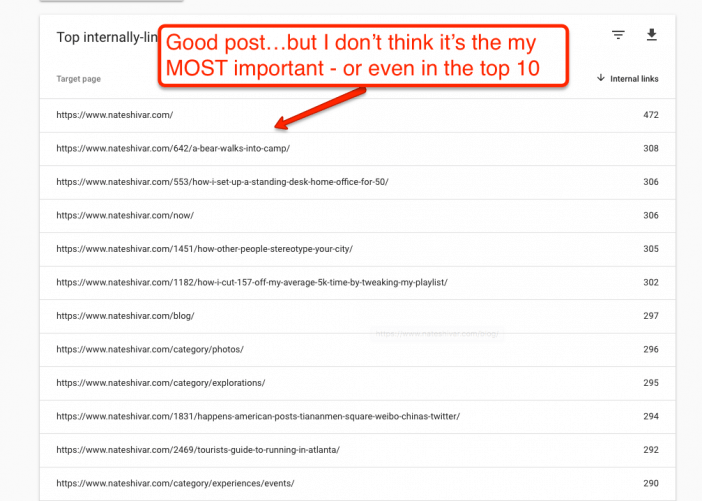

Internal Links

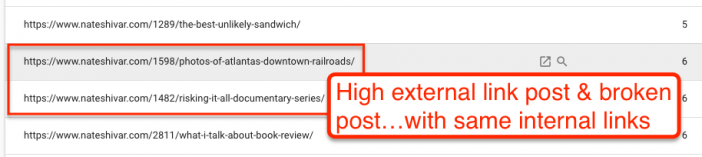

This section lets you understand the links within your site and how Google is crawling it. It will differ from a Screaming Frog crawl, since it shows exactly how Googlebot itself has been crawling your site.

You should mostly use this report to look for one thing: outliers.

Sort the list by most links & by fewest links. See if there are pages that are linked to much more than others. See if there are pages that should be linked to more often…but aren’t.
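To make “outliers” a little less subjective, you can apply a standard interquartile-range (IQR) rule to the exported link counts. This is a minimal sketch. The page paths and counts are hypothetical. The fence of 1.5 times the IQR is a common statistical convention, not a Google threshold.

```python
from statistics import quantiles


def link_count_outliers(counts: dict[str, int], k: float = 1.5) -> dict[str, list[str]]:
    """Flag pages whose internal-link count falls outside the IQR fences.

    Needs at least two pages. `k` = 1.5 is the usual Tukey-style fence.
    """
    q1, _, q3 = quantiles(list(counts.values()), n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return {
        "over_linked": sorted(page for page, c in counts.items() if c > high),
        "under_linked": sorted(page for page, c in counts.items() if c < low),
    }


# Hypothetical internal-link counts per page.
counts = {
    "/": 120, "/about/": 12, "/blog/": 15, "/contact/": 9,
    "/guide/": 14, "/new-guide/": 2, "/pricing/": 11, "/services/": 10,
}
print(link_count_outliers(counts))
```

Expect your homepage and main navigation pages to show up as “over-linked.” The interesting results are the pages you did not expect.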

Pages getting crawled more does not equal higher rankings. But, links do pass critical information to the Googlebot through both anchor text and link context.

If you have underperforming content, it might be underperforming because your internal links aren’t painting the right picture for Googlebot. This issue is common on blogs, where old content receives more internal links because it has been around longer (not because it is more relevant).

If you see pages that have more links than they deserve – they might just be in a stale category or tag page. Based on that, you should revise your category structure to coax Googlebot into crawling deeper, more relevant pages.

Internal links can be overdone, but they are also the simplest links to build. The Internal Links report can help you do that.

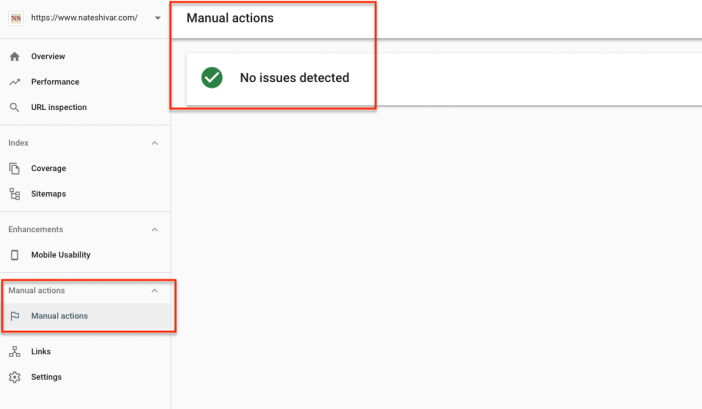

Manual Actions

Google uses a combination of rewards, threats, announcements and manual team reviews to trigger webmaster behavior that leads to better, cleaner signals for the Googlebot.

If a Web Spam team member finds “unnatural” marketing or website behavior, they will let you know about it in this section.

If you get a message, take action immediately.

Index

Google stores copies of your website on its servers and uses those copies to analyze & serve search results.

That means that understanding what those copies look like and how Google obtains them is essential to performing well on Google Search.

If your live website looks great, but Google’s copy looks like crap – then you will never rank well for any otherwise relevant search queries.

This report will help you figure out exactly what Google has & if it aligns with your actual website.

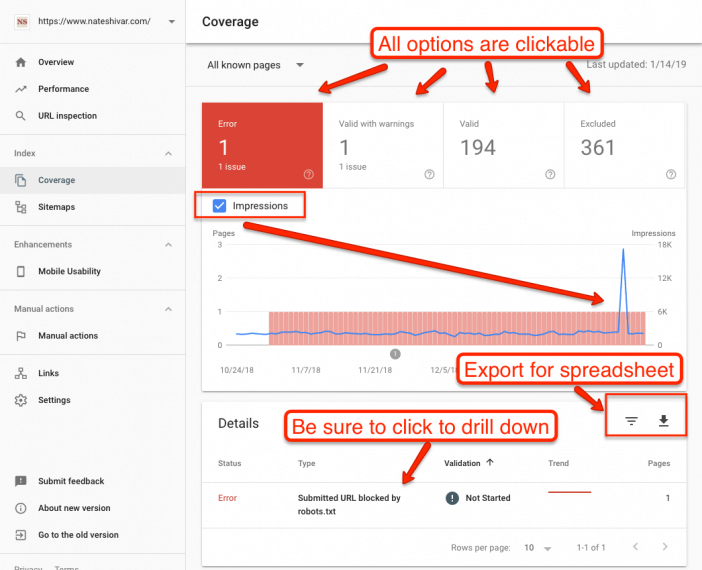

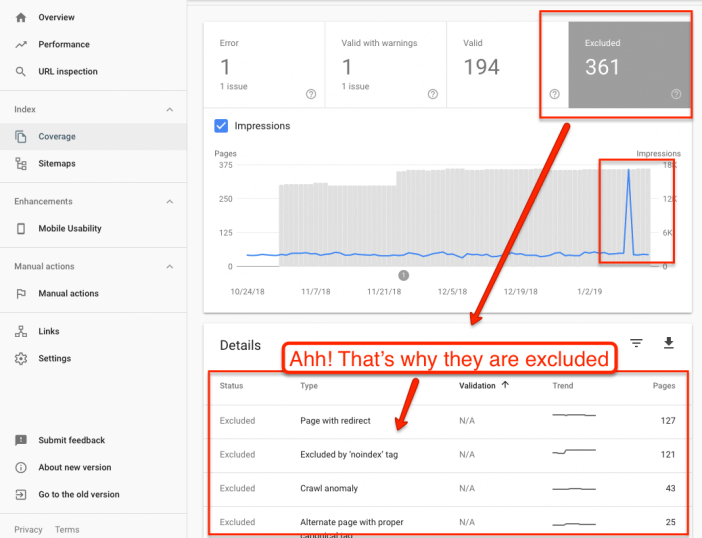

Coverage

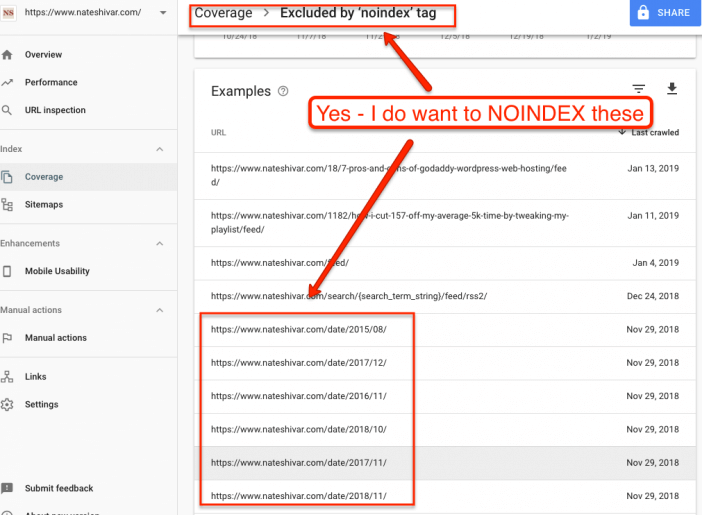

For a URL to appear in Google’s search results, Google has to have a copy of that page “indexed” on Google’s servers.

If a page is not in Google’s index, then it will not get organic traffic from Google.

You can use this report to make sure that all the pages that you want Google to index are in fact indexed.
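Keep a list of the URLs you want indexed, for example from your CMS or sitemap. A set difference against the indexed URLs you export from this report then shows the gaps. This is a minimal sketch with placeholder URLs. URLs are compared exactly, so `/guide` and `/guide/` count as different pages. Report exports may not list every URL, so treat the result as a starting point.

```python
def not_indexed(wanted: list[str], indexed: list[str]) -> list[str]:
    """Return wanted URLs missing from the indexed list (exact match after trimming whitespace)."""
    indexed_set = {url.strip() for url in indexed}
    return sorted({url.strip() for url in wanted} - indexed_set)


wanted = [
    "https://www.example.com/",
    "https://www.example.com/guide/",
    "https://www.example.com/pricing/",
]
indexed = ["https://www.example.com/", "https://www.example.com/pricing/"]
print(not_indexed(wanted, indexed))
```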

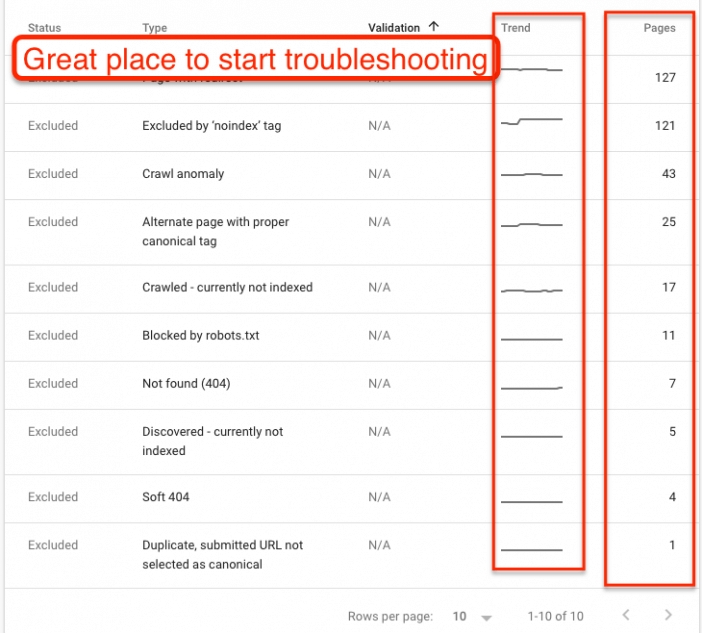

Like the Performance report, it’s easy to drown in data unless you have a hypothesis or a problem to solve.

Form a hypothesis (“some good pages aren’t performing well because they are wrongly excluded”) and click to drill down and find answers.

The new Search Console provides detailed reporting on errors. It will also provide exact page URLs and trends to help you pair errors to recent site changes.

Note that you can also use the data to confirm a positive hypothesis (e.g., “my new plugin should help me exclude low-quality pages from the index”).

The Coverage report area is worthy of a separate post because there is so much data. But that’s a good problem to have. Do not be afraid to click down and explore, and don’t panic over the jargon. Googlebot has never been perfect (and likely never will be), so it’s going to do some weird stuff. The heart of search engine optimization is understanding what Googlebot is doing – and how you can help it help you.

The Coverage report is a great place to start.

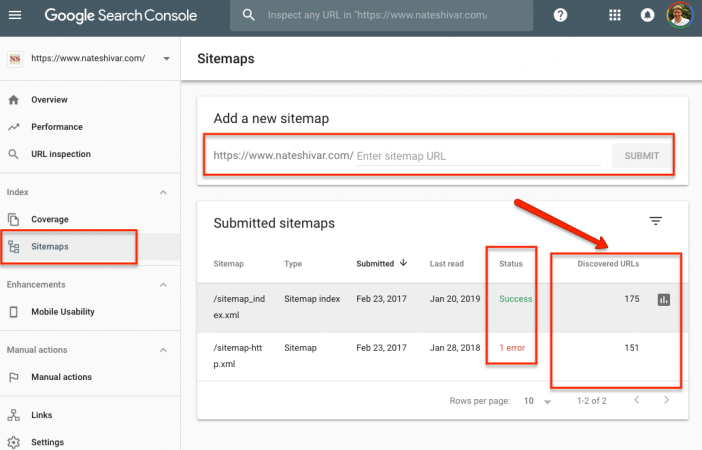

Sitemaps

Search engines use sitemaps to complement their crawl of your website. Think of them as a “map” of your website. As Googlebot crawls your site, it will also look at your sitemap for guidance – and vice versa.

XML is the most common sitemap format, though Google also accepts RSS/Atom feeds and plain-text lists of URLs. Sitemaps must have few or no errors – otherwise, Googlebot will start to ignore them (though it’s generally not as ruthless as Bingbot).

Use this report to find errors that need to be fixed, and to reverse engineer why some pages are not getting indexed.
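To help with both tasks, the minimal Python sketch below reads the URLs from an XML sitemap. It then flags any URLs that fall outside the property you verified, for example leftover HTTP URLs after an HTTPS move. It uses the standard sitemaps.org namespace and handles a regular `<urlset>` sitemap, not a sitemap index file. The sitemap contents and the property prefix are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> inside the <url> entries of a standard XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def urls_outside_property(urls: list[str], property_prefix: str) -> list[str]:
    """Return URLs that don't start with the verified URL-prefix property."""
    return [url for url in urls if not url.startswith(property_prefix)]


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/guide/</loc></url>
  <url><loc>http://www.example.com/old-page/</loc></url>
</urlset>"""

urls = sitemap_urls(SAMPLE_SITEMAP)
print(urls_outside_property(urls, "https://www.example.com/"))
```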

Other Resources

Google has many other resources dedicated to specific issues.

Their help section is quite extensive. And even though Search Console is out of Beta, they are still adding, tweaking, and porting features. Be sure to submit feedback if you see something that is either confusing or simply wrong.

Next Steps

No matter how small your website is, you need a verified Google Search Console account. Make sure every version of your website (HTTP and HTTPS, WWW and non-WWW) is verified.

After that, remember that Google Search Console is a tool. It doesn’t do anything on its own for your website. Start learning how to use it, what the data means, and implement website changes to keep improving your website & marketing.

Editor’s Note – I wrote this post for DIYers and non-professional SEOs. My goal is to simplify some of the jargon & data so that non-professionals can use it effectively. I fact-checked all statements against Google statements & respected industry tests. However, if there are any facts or phrasings that you think are inaccurate, please contact me!

Read more about 5 Free Beginner SEO Tools From Google & How to Use Them.